Todd Mostak is the CTO and co-founder of HEAVY.AI. The firm produces AI-powered, GPU database and visualisation software and has previously traded under the MapD and OmniSci brands.

Todd spent the late 2000s and early 2010s studying Arabic in Syria and Egypt. Todd also was an accomplished C++ developer and early adopter of Nvidia's CUDA language. Todd used these skills to build a GPU database that could analyse the flood of data appearing on Twitter when the Arab Spring kicked off.

By 2013, Todd was back in California and launched MapD. One of the earliest databases to be powered by both Nvidia's GPUs as well as conventional CPUs. Today the firm employs 50 staff members and has done well selling its software to telcos, energy firms and the US Federal Government. Some of HEAVY.AI's clients use their offering to analyse tens of billions of records.

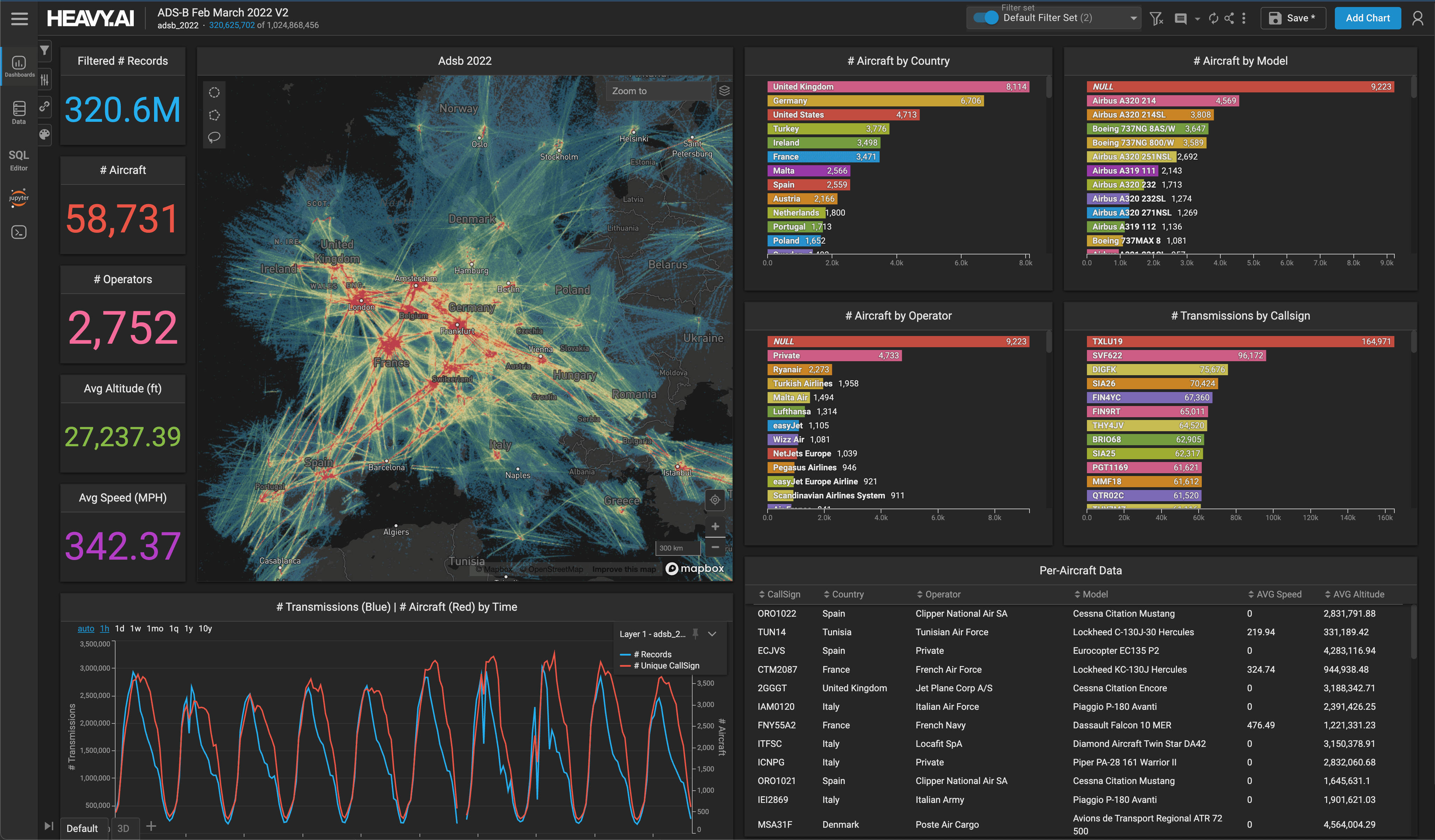

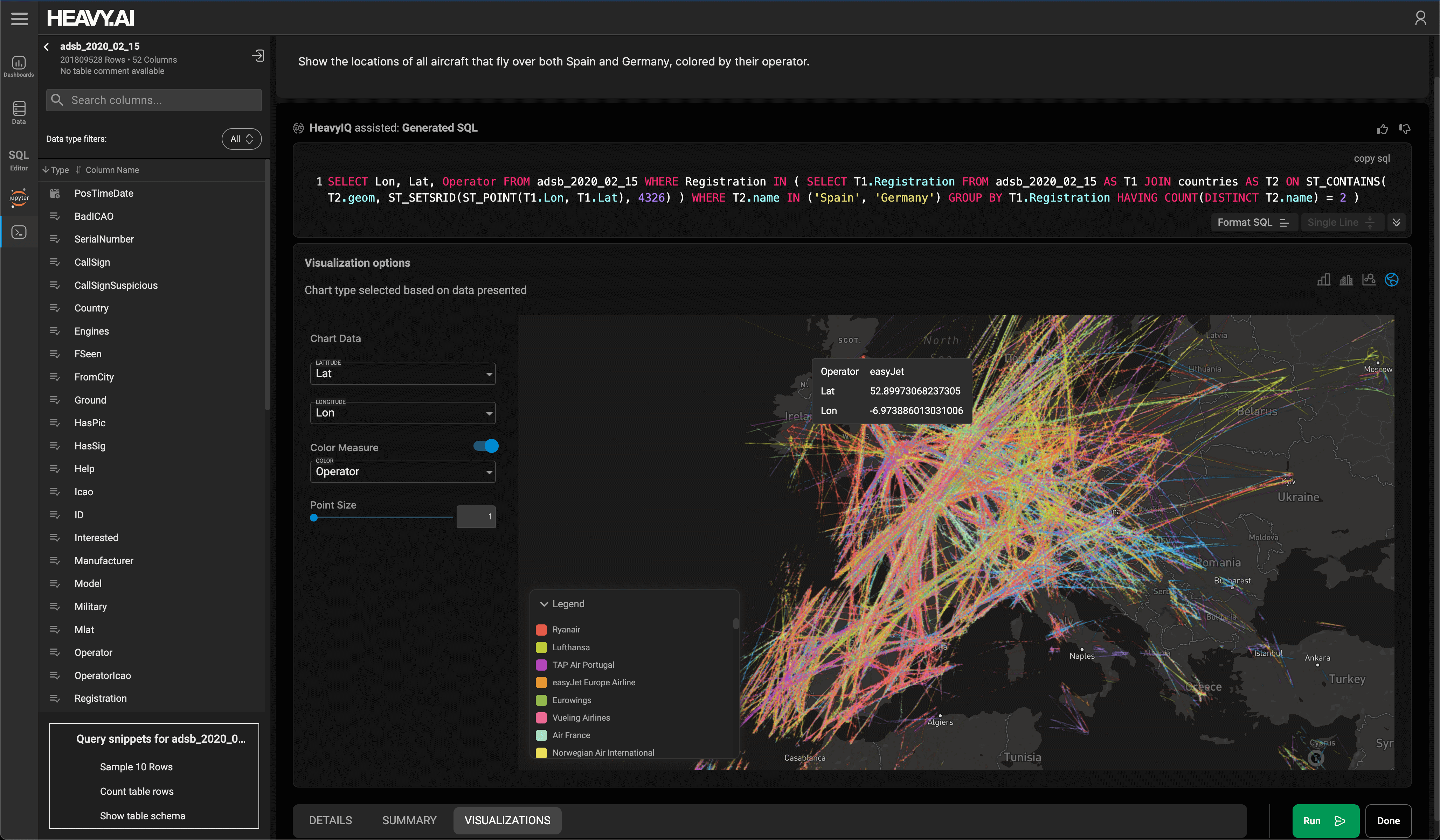

Below is a screenshot from HEAVY.AI's dashboarding interface.

In 2017, I published a blog on how to compile their newly open-sourced database engine and in 2020, I benchmarked their CPU-powered macOS offering. Earlier this year they launched HeavyIQ, an AI-powered, English-to-SQL interface for exploring datasets both large and small on HEAVY.AI.

A Large Language Model

SQL is the most popular to communicate with databases but isn't always the easiest to write. I've been writing SQL statements since the 1990s and even in 2024, I can find myself needing to refer to documentation and spending 30 minutes or more getting more complex statements to run as I wish.

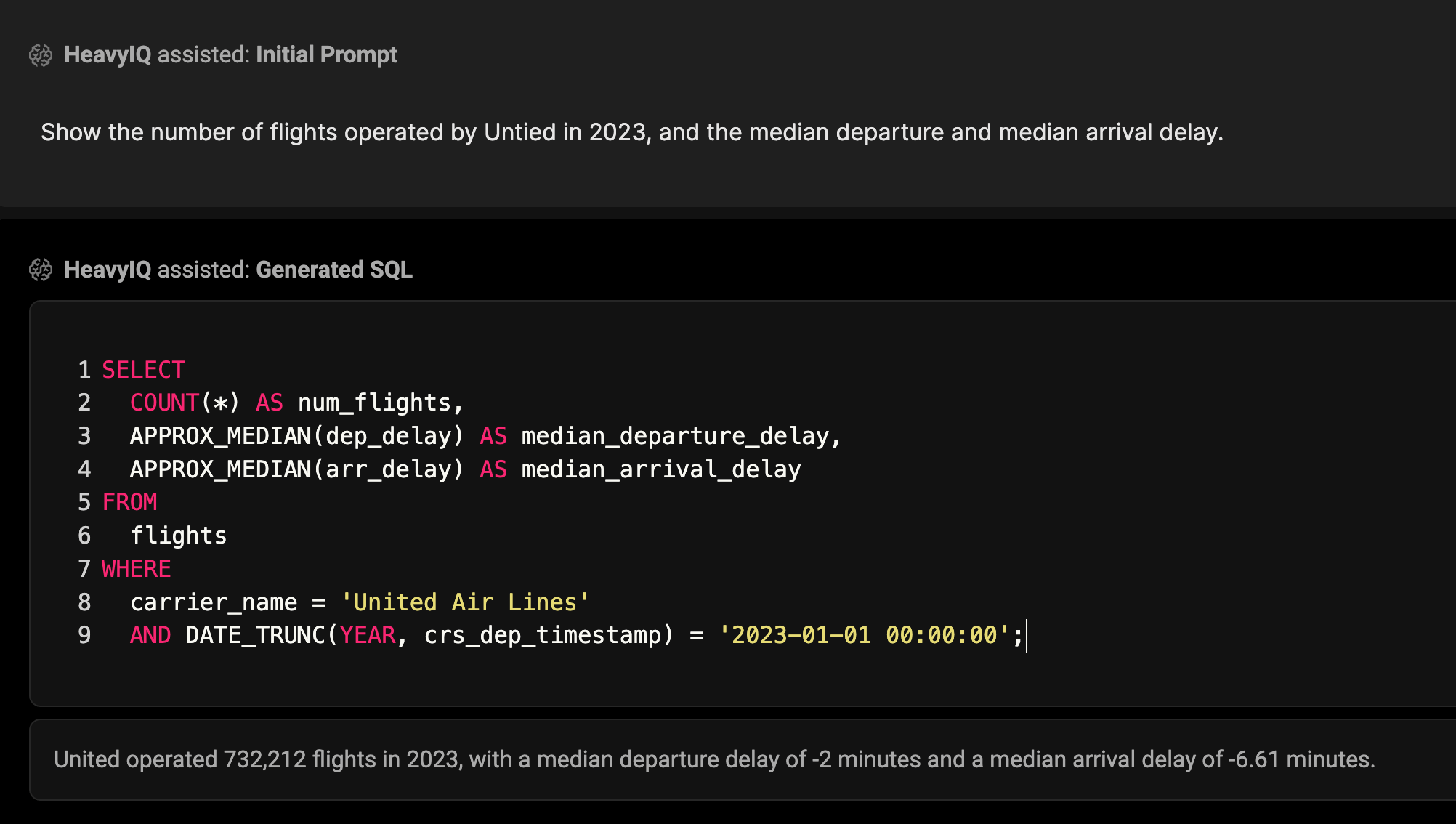

Large Language Models (LLMs) are a form of Artificial Intelligence (AI) that can understand English and act on prompts / commands written out by the user. HEAVY.AI has trained a state-of-the-art LLM that can produce SQL from questions and commands written in plain English, execute said SQL on HeavyDB, their GPU-accelerated database and visualise the results in Immerse, their dashboarding and visualisation environment.

This means you can ask questions in English, explore the results visually and interact with them using mouse clicks. This lowers the level of effort and training needed dramatically.

In addition, HeavyIQ can explain the results of a query. Below a prompt was given, SQL was generated and executed and an English-language one-liner was returned.

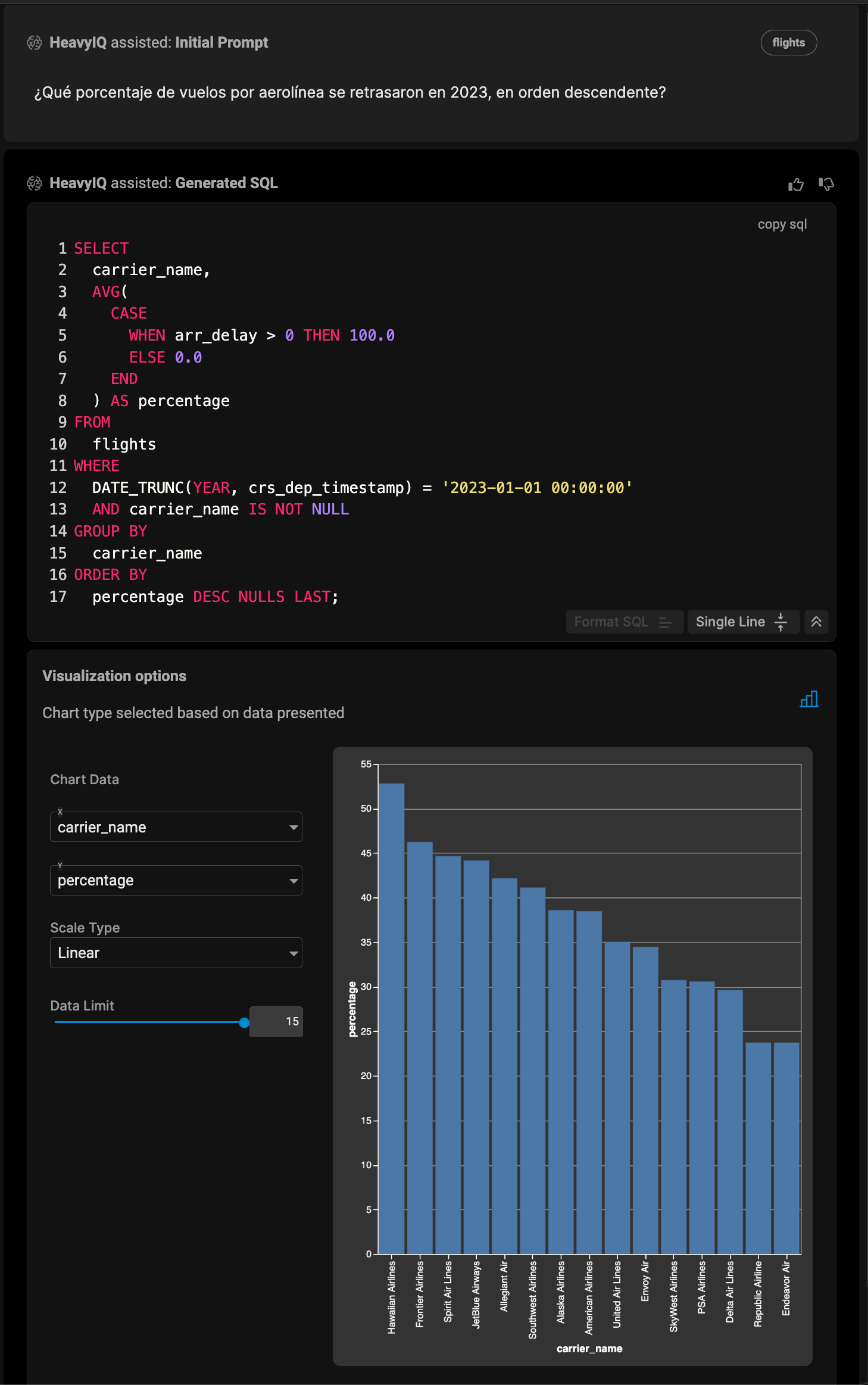

HeavyIQ was built upon fine-tuning Meta's 70-billion parameter, Llama-3 model so it can speak languages other than English, like Russian, Spanish and Italian.

Below HeavyIQ is being prompted with "¿Qué porcentaje de vuelos por aerolínea se retrasaron en 2023, en orden descendente?" which is Spanish for "What percentage of flights by airline were delayed in 2023, in descending order?".

HeavyIQ also has co-pilot functionality. It can generate lists of questions based on your data. Also, if there are any mistakes in SQL you've written out, it'll suggest fixes.

The most popular LLMs are hosted by the companies that have built them. This means these companies can potentially see your commercially sensitive datasets and see what you're working on as in realtime as you prompt their model.

But in the case of HeavyIQ, if you've bought a license, the model lives within your own infrastructure. Your prompts to HeavyIQ won't interact with HEAVY.AI's infrastructure in any way. This also means HeavyIQ can run in an air-gapped environment with no connection to the Internet.

There is also a free version of HEAVY.AI that can be installed on your own infrastructure. The only major difference with this version is that the model is hosted on HEAVY.AI's infrastructure. If you're unfamiliar with their offering or want to add HEAVY.AI on to your CV, it's worth getting it up and running.

A Supercomputer in the Cloud

In this post, I'm going to download and enrich four decades of aircraft flight data from the US Federal Aviation Administration (FAA) and the US Bureau of Transportation Statistics (BTS) and conduct some analysis of that data using English-language prompts.

I'll use an AWS g5.12xlarge instance. It costs $5.672 / hour when paid for on-demand.

It's running Ubuntu 22.04.4 LTS and has 48 vCPUs powered by AMD's second-generation EPYC platform. There's 192 GB of RAM and just under 4 TB of NVMe-backed storage on this instance.

The instance hosts 4x Nvidia A10G Tensor Core GPUs which are based on the Ampere Architecture that Nvidia launched in 2020. They have a combined 96 GB of GDDR6 Memory that supports a throughput of 600 GB/s. HEAVY.AI is GPU-accelerated and can take good advantage of these cards.

Below is the output from Nvidia's System Management Interface utility.

$ nvidia-smi

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.54.14 Driver Version: 550.54.14 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA A10G Off | 00000000:00:1B.0 Off | 0 |

| 0% 30C P8 9W / 300W | 0MiB / 23028MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 NVIDIA A10G Off | 00000000:00:1C.0 Off | 0 |

| 0% 37C P8 10W / 300W | 0MiB / 23028MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 2 NVIDIA A10G Off | 00000000:00:1D.0 Off | 0 |

| 0% 36C P8 11W / 300W | 0MiB / 23028MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 3 NVIDIA A10G Off | 00000000:00:1E.0 Off | 0 |

| 0% 37C P8 9W / 300W | 0MiB / 23028MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

HEAVY.AI Up & Running

I'll first install Docker and some CLI utilities.

$ sudo apt update

$ sudo apt install \

csvjson \

docker.io \

docker-compose \

jq

$ newgrp docker

Running GPU-accelerated software on Linux involves a lot of moving parts but HEAVY.AI has simplified setting up an environment with a single install script.

$ cd ~

$ git clone https://github.com/heavyai/heavyai-devstack

$ ~/heavyai-devstack/nvidiaSetup.sh

I'll reboot the system and then install and launch HEAVY.AI.

$ sudo reboot

$ ~/heavyai-devstack/installHeavy.sh

$ docker-compose up -d

I'll install a Python environment and a few packages that will be useful for analysing aviation data in HEAVY.AI's dashboard offering.

$ mkdir -p ~/miniconda3

$ wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh \

-O ~/miniconda3/miniconda.sh

$ bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

$ rm -rf ~/miniconda3/miniconda.sh

$ ~/miniconda3/bin/conda init bash

$ conda update -n base -c defaults conda

$ conda install -c conda-forge mamba

$ mamba create -n flights python=3.10.12

$ mamba init

$ mamba activate flights

$ mamba install -n flights requests

$ mamba install -c conda-forge heavyai

$ mamba install -c conda-forge boto3

The above operating system and software installation footprint is ~36 GB.

Lastly, I'll create a BASH function that will help preview each of the datasets that I'll be importing into HEAVY.AI.

$ function first_record () {

head -n2 $1 \

| sed '1s/^\xEF\xBB\xBF//' \

| sed 's/[[:blank:]]*,/,/g' \

| csvjson \

| jq -S .

}

The FAA Aircraft Registration Dataset

I'll create a folder for the various datasets and derivatives used in this post.

$ mkdir -p ~/bts_flights

$ cd ~/bts_flights

The following will download the FAA's Aircraft Registration Database.

$ aws s3 --no-sign-request \

sync \

s3://batteries-included/flights_demo/ \

flights_demo/

There are four files of interest in the flights_demo folder.

The MASTER.txt file contains the serial numbers, registration addresses, manufacturing details and certifications of all US civilian aircraft. It's 172 MB and contains 293,466 records.

$ first_record MASTER.txt

[

{

" KIT MODEL": null,

"AIR WORTH DATE": 20140325,

"CERT ISSUE DATE": 20211130,

"CERTIFICATION": "1T",

"CITY": "RIDGELAND",

"COUNTRY": "US",

"COUNTY": 89,

"ENG MFR MDL": 52041,

"EXPIRATION DATE": 20281130,

"FRACT OWNER": null,

"KIT MFR": null,

"LAST ACTION DATE": 20230823,

"MFR MDL CODE": 2076811,

"MODE S CODE": 50000001,

"MODE S CODE HEX": "A00001",

"N-NUMBER": true,

"NAME": "TENAX AEROSPACE LLC",

"OTHER NAMES(1)": null,

"OTHER NAMES(2)": null,

"OTHER NAMES(3)": null,

"OTHER NAMES(4)": null,

"OTHER NAMES(5)": null,

"REGION": 2,

"SERIAL NUMBER": "680-0519",

"STATE": "MS",

"STATUS CODE": "V",

"STREET": "400 W PARKWAY PL STE 201",

"STREET2": null,

"TYPE AIRCRAFT": 5,

"TYPE ENGINE": 5,

"TYPE REGISTRANT": 7,

"UNIQUE ID": 1141371,

"YEAR MFR": 2014,

"ZIP CODE": 391576005,

"ii": null

}

]

The ENGINE.txt file contains aircraft engine specifications. It's 224 KB and contains 4,665 records.

$ first_record ENGINE.txt

[

{

"CODE": 0,

"HORSEPOWER": 0,

"MFR": null,

"MODEL": null,

"THRUST": 0,

"TYPE": false,

"g": null

}

]

The ACFTREF.txt file contains aircraft equipment and seating arrangements. It's 14 MB and contains 91,355 records.

$ first_record ACFTREF.txt

[

{

"AC-CAT": true,

"AC-WEIGHT": "CLASS 3",

"BUILD-CERT-IND": false,

"CODE": 20901,

"MFR": "AAR AIRLIFT GROUP INC",

"MODEL": "UH-60A",

"NO-ENG": 2,

"NO-SEATS": 15,

"SPEED": 0,

"TC-DATA-HOLDER": null,

"TC-DATA-SHEET": null,

"TYPE-ACFT": 6,

"TYPE-ENG": 3,

"n": null

}

]

The T_MASTER_CORD.csv file contains a list of airports and airfields in the US. It's 3.7 MB and contains 19,156 records.

$ first_record T_MASTER_CORD.csv

[

{

"AIRPORT": "01A",

"AIRPORT_COUNTRY_CODE_ISO": "US",

"AIRPORT_COUNTRY_NAME": "United States",

"AIRPORT_ID": 10001,

"AIRPORT_IS_CLOSED": false,

"AIRPORT_IS_LATEST": true,

"AIRPORT_SEQ_ID": 1000101,

"AIRPORT_START_DATE": "2007-07-01T00:00:00",

"AIRPORT_STATE_CODE": "AK",

"AIRPORT_STATE_FIPS": 2,

"AIRPORT_STATE_NAME": "Alaska",

"AIRPORT_THRU_DATE": null,

"AIRPORT_WAC": true,

"CITY_MARKET_ID": 30001,

"CITY_MARKET_WAC": true,

"DISPLAY_AIRPORT_CITY_NAME_FULL": "Afognak Lake, AK",

"DISPLAY_AIRPORT_NAME": "Afognak Lake Airport",

"DISPLAY_CITY_MARKET_NAME_FULL": "Afognak Lake, AK",

"LATITUDE": 58.10944444,

"LAT_DEGREES": 58,

"LAT_HEMISPHERE": false,

"LAT_MINUTES": 6,

"LAT_SECONDS": 34,

"LONGITUDE": -152.90666667,

"LON_DEGREES": 152,

"LON_HEMISPHERE": "W",

"LON_MINUTES": 54,

"LON_SECONDS": 24

}

]

The BTS Flight Datasets

I'll download the Bureau of Transportation Statistics' Unique Carrier Codes dataset.

$ wget -o unique_carriers.csv "https://www.transtats.bts.gov/Download_Lookup.asp?Y11x72=Y_haVdhR_PNeeVRef"

It's 52K in size and contains 1,736 records. Here are the first few lines of the CSV.

$ head unique_carriers.csv

Code,Description

"02Q","Titan Airways"

"04Q","Tradewind Aviation"

"05Q","Comlux Aviation, AG"

"06Q","Master Top Linhas Aereas Ltd."

"07Q","Flair Airlines Ltd."

"09Q","Swift Air, LLC d/b/a Eastern Air Lines d/b/a Eastern"

"0BQ","DCA"

"0CQ","ACM AIR CHARTER GmbH"

"0FQ","Maine Aviation Aircraft Charter, LLC"

I'll generate a list of URLs for the Bureau of Transportation Statistics' Ontime Reporting dataset. It contains ~220M flight records broken up into one ZIP'ed CSV file for each month since late 1987.

$ python3

prefix = 'https://transtats.bts.gov/PREZIP'

naming_template = 'On_Time_Reporting_Carrier_On_Time_Performance' \

'_1987_present_%04d_%d.zip'

filenames = []

for year in range(1988, 2024):

for month in range(1, 13):

filenames.append(naming_template % (year, month))

filenames.append(naming_template % (1987, 10)))

filenames.append(naming_template % (1987, 11)))

filenames.append(naming_template % (1987, 12)))

filenames.append(naming_template % (2024, 1)))

open('manifest.txt', 'w')\

.write('\n'.join('wget -c "%s/%s"' % (prefix, uri)

for uri in filenames))

I'll then download the files using wget.

$ bash -x manifest.txt

I'll decompress the files and convert them from Latin-1 encoding to UTF-8.

$ for FILENAME in *.zip; do

unzip -j $FILENAME '*.csv'

done

$ for FILENAME in *.csv;

iconv -f latin1 -t utf-8 $FILENAME > temp

mv temp $FILENAME

done

Each ZIP file contains a CSV file that is ~275 MB when decompressed and has around 650K records. Below is an example record.

$ first_record On_Time_Reporting_Carrier_On_Time_Performance_\(1987_present\)_2007_3.csv

[

{

"ActualElapsedTime": 119,

"AirTime": 105,

"ArrDel15": 0,

"ArrDelay": -1,

"ArrDelayMinutes": 0,

"ArrTime": 1416,

"ArrTimeBlk": "1400-1459",

"ArrivalDelayGroups": -1,

"CRSArrTime": 1417,

"CRSDepTime": 1320,

"CRSElapsedTime": 117,

"CancellationCode": null,

"Cancelled": 0,

"CarrierDelay": null,

"DOT_ID_Reporting_Airline": 19977,

"DayOfWeek": 2,

"DayofMonth": 27,

"DepDel15": 0,

"DepDelay": -3,

"DepDelayMinutes": 0,

"DepTime": 1317,

"DepTimeBlk": "1300-1359",

"DepartureDelayGroups": -1,

"Dest": "TUS",

"DestAirportID": 15376,

"DestAirportSeqID": 1537601,

"DestCityMarketID": 30436,

"DestCityName": "Tucson, AZ",

"DestState": "AZ",

"DestStateFips": 4,

"DestStateName": "Arizona",

"DestWac": 81,

"Distance": 639,

"DistanceGroup": 3,

"Div1Airport": null,

"Div1AirportID": null,

"Div1AirportSeqID": null,

"Div1LongestGTime": null,

"Div1TailNum": null,

"Div1TotalGTime": null,

"Div1WheelsOff": null,

"Div1WheelsOn": null,

"Div2Airport": null,

"Div2AirportID": null,

"Div2AirportSeqID": null,

"Div2LongestGTime": null,

"Div2TailNum": null,

"Div2TotalGTime": null,

"Div2WheelsOff": null,

"Div2WheelsOn": null,

"Div3Airport": null,

"Div3AirportID": null,

"Div3AirportSeqID": null,

"Div3LongestGTime": null,

"Div3TailNum": null,

"Div3TotalGTime": null,

"Div3WheelsOff": null,

"Div3WheelsOn": null,

"Div4Airport": null,

"Div4AirportID": null,

"Div4AirportSeqID": null,

"Div4LongestGTime": null,

"Div4TailNum": null,

"Div4TotalGTime": null,

"Div4WheelsOff": null,

"Div4WheelsOn": null,

"Div5Airport": null,

"Div5AirportID": null,

"Div5AirportSeqID": null,

"Div5LongestGTime": null,

"Div5TailNum": null,

"Div5TotalGTime": null,

"Div5WheelsOff": null,

"Div5WheelsOn": null,

"DivActualElapsedTime": null,

"DivAirportLandings": null,

"DivArrDelay": null,

"DivDistance": null,

"DivReachedDest": null,

"Diverted": 0,

"FirstDepTime": null,

"FlightDate": "2007-03-27",

"Flight_Number_Reporting_Airline": 293,

"Flights": 1,

"IATA_CODE_Reporting_Airline": "UA",

"LateAircraftDelay": null,

"LongestAddGTime": null,

"Month": 3,

"NASDelay": null,

"Origin": "DEN",

"OriginAirportID": 11292,

"OriginAirportSeqID": 1129202,

"OriginCityMarketID": 30325,

"OriginCityName": "Denver, CO",

"OriginState": "CO",

"OriginStateFips": 8,

"OriginStateName": "Colorado",

"OriginWac": 82,

"Quarter": true,

"Reporting_Airline": "UA",

"SecurityDelay": null,

"Tail_Number": "N942UA",

"TaxiIn": 5,

"TaxiOut": 9,

"TotalAddGTime": null,

"WeatherDelay": null,

"WheelsOff": 1326,

"WheelsOn": 1411,

"Year": 2007,

"fffff": null

}

]

Extracting Columns of Interest

I'll run a Python script to extract the fields of interest from each of the CSVs in the Ontime dataset.

$ python3

import csv

from glob import glob

cols_keys = [

'FlightDate',

'Reporting_Airline',

'Tail_Number',

'Flight_Number_Reporting_Airline',

'OriginAirportID',

'DestAirportID',

'CRSDepTime',

'DepTime',

'DepDelay',

'TaxiOut',

'WheelsOff',

'WheelsOn',

'TaxiIn',

'CRSArrTime',

'ArrTime',

'ArrDelay',

'Cancelled',

'CancellationCode',

'Diverted',

'CRSElapsedTime',

'ActualElapsedTime',

'AirTime',

'Distance',

'CarrierDelay',

'WeatherDelay',

'NASDelay',

'SecurityDelay',

'LateAircraftDelay']

for filename in glob('On_Time_Reporting*.csv'):

new_filename = 'renamed_%s' % filename.split("present)_")[-1]

with open(filename, newline='') as csv_file:

reader = csv.DictReader(csv_file)

new_fieldnames = [name

for name in reader.fieldnames

if name in cols_keys]

with open(new_filename, 'w', newline='') as f:

writer = csv.DictWriter(f, fieldnames=new_fieldnames)

writer.writeheader()

for row in reader:

writer.writerow({key: value

for key, value in row.items()

if key in cols_keys})

The resulting CSVs are around ~85 MB each. Here is the first record of one of the resulting files.

$ first_record renamed_2007_3.csv

[

{

"ActualElapsedTime": 119,

"AirTime": 105,

"ArrDelay": -1,

"ArrTime": 1416,

"CRSArrTime": 1417,

"CRSDepTime": 1320,

"CRSElapsedTime": 117,

"CancellationCode": null,

"Cancelled": 0,

"CarrierDelay": null,

"DepDelay": -3,

"DepTime": 1317,

"DestAirportID": 15376,

"Distance": 639,

"Diverted": 0,

"FlightDate": "2007-03-27",

"Flight_Number_Reporting_Airline": 293,

"LateAircraftDelay": null,

"NASDelay": null,

"OriginAirportID": 11292,

"Reporting_Airline": "UA",

"SecurityDelay": null,

"Tail_Number": "N942UA",

"TaxiIn": 5,

"TaxiOut": 9,

"WeatherDelay": null,

"WheelsOff": 1326,

"WheelsOn": 1411

}

]

Loading Flights into HEAVY.AI

I'll create a flights database that will hold all the tables in this post.

$ bin/heavysql -u admin -p HyperInteractive

CREATE DATABASE flights;

I'll then load in the FAA's Carriers dataset.

$ bin/heavysql -u admin -p HyperInteractive -db flights

CREATE TABLE IF NOT EXISTS unique_carriers (

Code TEXT ENCODING DICT(16),

Description TEXT ENCODING DICT(16));

COPY unique_carriers

FROM 'unique_carriers.csv'

WITH (DELIMITER = ',');

I'll then load in the Ontime dataset.

CREATE TABLE IF NOT EXISTS flights (

FlightDate DATE ENCODING DAYS(32),

Reporting_Airline TEXT ENCODING DICT(8),

Tail_Number TEXT ENCODING DICT(32),

Flight_Number_Reporting_Airline TEXT ENCODING DICT(16),

OriginAirportID SMALLINT,

DestAirportID SMALLINT,

CRSDepTime SMALLINT,

DepTime SMALLINT,

DepDelay SMALLINT,

TaxiOut SMALLINT,

WheelsOff SMALLINT,

WheelsOn SMALLINT,

TaxiIn SMALLINT,

CRSArrTime SMALLINT,

ArrTime SMALLINT,

ArrDelay SMALLINT,

Cancelled SMALLINT,

CancellationCode TEXT ENCODING DICT(8),

Diverted SMALLINT,

CRSElapsedTime SMALLINT,

ActualElapsedTime SMALLINT,

AirTime SMALLINT,

Distance SMALLINT,

CarrierDelay SMALLINT,

WeatherDelay SMALLINT,

NASDelay SMALLINT,

SecurityDelay SMALLINT,

LateAircraftDelay SMALLINT);

$ for FILENAME in renamed*.csv; do

echo "COPY flights

FROM '$FILENAME'

WITH (DELIMITER=',',

THREADS=30)" \

| bin/heavysql -u admin -p HyperInteractive -db flights

done

The above loaded in 3.5 hours and took up 447 GB in HEAVY.AI's internal storage format.

Flight Data Enrichment

Below I'll add carrier details to each flight.

ALTER TABLE flights

ADD COLUMN carrier_name_full TEXT ENCODING DICT(16);

ALTER TABLE flights

ADD COLUMN carrier_name TEXT ENCODING DICT(16);

UPDATE flights

SET carrier_name_full = (select Description

FROM (SELECT T2.RowID,

T1.Description

FROM unique_carriers AS T1

JOIN flights AS T2 ON T1.Code = T2.Reporting_Airline) tb1

WHERE tb1.RowID = flights.RowID);

UPDATE flights

SET carrier_name = (

SELECT name

FROM (

SELECT RowId,

REPLACE(

REPLACE(

REPLACE(

REPLACE(

REPLACE(

REPLACE(

REPLACE(carrier_name_full, ' Inc.', ''),

' Co.',

''

),

' LLC',

''

),

' d/b/a ATA',

''

),

' d/b/a aha!',

''

),

' (Merged with US Airways 9/05. Stopped reporting 10/07.)',

''

),

' Corporation',

''

) as name

FROM flights

) tb1

WHERE tb1.RowID = flights.RowID);

DROP TABLE unique_carriers;

Below I've run some SQL to clean up the timestamps.

ALTER TABLE flights

ADD COLUMN crs_arr_timestamp TIMESTAMP(0) ENCODING FIXED(32);

UPDATE flights

SET CRS_arr_timestamp =

CASE

WHEN crsarrtime = 0 AND crsdeptime = 0 THEN NULL

WHEN crsarrtime > crsdeptime THEN DATEADD(

MINUTE,

crsarrtime % 100,

DATEADD(

HOUR,

crsarrtime / 100,

DATE_TRUNC(DAY, FlightDate))

)

WHEN crsarrtime <= crsdeptime THEN DATEADD(

MINUTE,

crsarrtime % 100,

DATEADD(

DAY,

1,

DATEADD(

HOUR,

crsarrtime / 100,

DATE_TRUNC(DAY, FlightDate))

)

)

END;

ALTER TABLE flights

ADD COLUMN actual_arr_timestamp TIMESTAMP(0) ENCODING FIXED(32);

UPDATE flights

SET actual_arr_timestamp =

CASE

WHEN arrtime = 0 AND deptime = 0 THEN NULL

WHEN arrtime > deptime THEN DATEADD(

MINUTE,

arrtime % 100,

DATEADD(

HOUR,

arrtime / 100,

DATE_TRUNC(DAY, FlightDate)

)

)

WHEN arrtime <= deptime THEN DATEADD(

MINUTE,

arrtime % 100,

DATEADD(

DAY,

1,

DATEADD(

HOUR,

arrtime / 100,

DATE_TRUNC(DAY, FlightDate)

)

)

)

END;

ALTER TABLE flights

ADD COLUMN actual_dep_timestamp TIMESTAMP(0) ENCODING FIXED(32);

UPDATE flights

SET actual_dep_timestamp =

CASE

WHEN arrtime = 0 AND deptime = 0 THEN NULL

WHEN arrtime > deptime THEN DATEADD(

MINUTE,

deptime % 100,

DATEADD(

HOUR,

deptime / 100,

DATE_TRUNC(DAY, FlightDate)

)

)

WHEN arrtime <= deptime THEN DATEADD(

MINUTE,

deptime % 100,

DATEADD(

DAY,

1,

DATEADD(

HOUR,

deptime / 100,

DATE_TRUNC(DAY, FlightDate)

)

)

)

END;

ALTER TABLE flights

ADD COLUMN crs_dep_timestamp TIMESTAMP(0) ENCODING FIXED(32);

UPDATE flights

SET crs_dep_timestamp =

CASE

WHEN arrtime = 0 AND deptime = 0 THEN NULL

WHEN arrtime > deptime THEN DATEADD(

MINUTE,

crsdeptime % 100,

DATEADD(

HOUR,

crsdeptime / 100,

DATE_TRUNC(DAY, FlightDate)

)

)

WHEN arrtime <= deptime THEN DATEADD(

MINUTE,

crsdeptime % 100,

DATEADD(

DAY,

1,

DATEADD(

HOUR,

crsdeptime / 100,

DATE_TRUNC(DAY, FlightDate)

)

)

)

END;

Below I've cleared away a few of the now unused columns and cleaned up the naming convention on a few others.

ALTER TABLE flights DROP COLUMN FlightDate;

ALTER TABLE flights RENAME COLUMN Reporting_Airline TO unique_carrier;

ALTER TABLE flights RENAME COLUMN Flight_Number_Reporting_Airline TO flight_num;

ALTER TABLE flights RENAME COLUMN Tail_Number TO tail_num;

ALTER TABLE flights RENAME COLUMN OriginAirportID TO origin_airport_id;

ALTER TABLE flights RENAME COLUMN DestAirportID TO dest_airport_id;

ALTER TABLE flights DROP COLUMN CRSDepTime;

ALTER TABLE flights DROP COLUMN CRSArrTime;

ALTER TABLE flights DROP COLUMN DepTime;

ALTER TABLE flights DROP COLUMN ArrTime;

ALTER TABLE flights RENAME COLUMN CRSElapsedTime TO crs_actual_elapsed_time;

ALTER TABLE flights RENAME COLUMN ActualElapsedTime TO actual_elapsed_time;

ALTER TABLE flights RENAME COLUMN AirTime TO air_time;

ALTER TABLE flights RENAME COLUMN ArrDelay TO arr_delay;

ALTER TABLE flights RENAME COLUMN DepDelay TO dep_delay;

ALTER TABLE flights RENAME COLUMN TaxiIn TO taxi_in;

ALTER TABLE flights RENAME COLUMN TaxiOut TO taxi_out;

ALTER TABLE flights RENAME COLUMN WheelsOn TO wheels_on;

ALTER TABLE flights RENAME COLUMN WheelsOff TO wheels_off;

ALTER TABLE flights RENAME COLUMN CancellationCode TO cancellation_code;

ALTER TABLE flights RENAME COLUMN CarrierDelay TO carrier_delay;

ALTER TABLE flights RENAME COLUMN WeatherDelay TO weather_delay;

ALTER TABLE flights RENAME COLUMN NASDelay TO nas_delay;

ALTER TABLE flights RENAME COLUMN SecurityDelay TO security_delay;

ALTER TABLE flights RENAME COLUMN LateAircraftDelay TO late_aircraft_delay;

I'll add some descriptions to a few of the columns.

COMMENT ON COLUMN flights.origin_airport_id IS 'Airport ID for the flights departure airport. Joins as a foreign key to the primary key in airports.airport_id';

COMMENT ON COLUMN flights.dest_airport_id IS 'Airport ID for the flights destination airport. Joins as a foreign key to the primary key in airports.airport_id';

COMMENT ON COLUMN flights.air_time IS 'Time in minutes the aircraft was in the air for this flight';

COMMENT ON COLUMN flights.cancellation_code IS 'Code explaining reason for flight cancellation: A - carrier caused B - weather C - National Aviation System D - Security';

Loading the FAA Tables into HEAVY.AI

Below I'll load three of the feeds from the FAA into HEAVY.AI.

$ bin/heavysql -u admin -p HyperInteractive -db flights

CREATE TABLE IF NOT EXISTS ACFTREF (

CODE TEXT ENCODING DICT(32),

MFR TEXT ENCODING DICT(32),

MODEL TEXT ENCODING DICT(32),

TYPEACFT TEXT ENCODING DICT(32),

TYPEENG SMALLINT,

ACCAT SMALLINT,

BUILDCERTIND SMALLINT,

NOENG SMALLINT,

NOSEATS SMALLINT,

ACWEIGHT TEXT ENCODING DICT(32),

SPEED SMALLINT,

TCDATASHEET TEXT ENCODING DICT(32),

TCDATAHOLDER TEXT ENCODING DICT(32));

CREATE TABLE IF NOT EXISTS ENGINE (

CODE INTEGER,

MFR TEXT ENCODING DICT(32),

MODEL TEXT ENCODING DICT(32),

TYPE SMALLINT,

HORSEPOWER SMALLINT,

THRUST INTEGER);

CREATE TABLE IF NOT EXISTS MASTER (

NNUMBER TEXT ENCODING DICT(32),

SERIALNUMBER TEXT ENCODING DICT(32),

MFRMDLCODE TEXT ENCODING DICT(32),

ENGMFRMDL INTEGER,

YEARMFR SMALLINT,

TYPEREGISTRANT SMALLINT,

NAME TEXT ENCODING DICT(32),

STREET TEXT ENCODING DICT(32),

STREET2 TEXT ENCODING DICT(32),

CITY TEXT ENCODING DICT(32),

STATE TEXT ENCODING DICT(32),

ZIPCODE TEXT ENCODING DICT(32),

REGION TEXT ENCODING DICT(32),

COUNTY SMALLINT,

COUNTRY TEXT ENCODING DICT(32),

LASTACTIONDATE TEXT ENCODING NONE,

CERTISSUEDATE TEXT ENCODING NONE,

CERTIFICATION TEXT ENCODING DICT(32),

TYPEAIRCRAFT TEXT ENCODING DICT(32),

TYPEENGINE SMALLINT,

STATUSCODE TEXT ENCODING DICT(32),

MODESCODE INTEGER,

FRACTOWNER TEXT ENCODING DICT(32),

AIRWORTHDATE TEXT ENCODING NONE,

OTHERNAMES1 TEXT ENCODING DICT(32),

OTHERNAMES2 TEXT ENCODING DICT(32),

OTHERNAMES3 TEXT ENCODING DICT(32),

OTHERNAMES4 TEXT ENCODING DICT(32),

OTHERNAMES5 TEXT ENCODING DICT(32),

EXPIRATIONDATE TEXT ENCODING NONE,

UNIQUEID INTEGER,

KITMFR TEXT ENCODING DICT(32),

KITMODEL TEXT ENCODING DICT(32),

MODESCODEHEX TEXT ENCODING DICT(32));

COPY ACFTREF

FROM 'flights_demo/ACFTREF.txt'

WITH (DELIMITER = ',');

COPY ENGINE

FROM 'flights_demo/ENGINE.txt'

WITH (DELIMITER = ',');

COPY MASTER

FROM 'flights_demo/MASTER.txt'

WITH (DELIMITER = ',');

Enriching the FAA's Datasets

Below I'll run some transformations on the FAA data to get it into a workable state.

$ bin/heavysql -u admin -p HyperInteractive -db flights

UPDATE MASTER set NNUMBER = 'N' || NNUMBER;

ALTER TABLE MASTER ADD COLUMN new_CERTISSUEDATE DATE;

UPDATE MASTER

SET new_CERTISSUEDATE =

TRY_CAST(SUBSTRING(CERTISSUEDATE FROM 1 FOR 4) || '-' ||

SUBSTRING(CERTISSUEDATE FROM 5 FOR 2) || '-' ||

SUBSTRING(CERTISSUEDATE FROM 7 FOR 2) AS DATE);

ALTER TABLE MASTER DROP COLUMN CERTISSUEDATE;

ALTER TABLE MASTER RENAME COLUMN new_CERTISSUEDATE TO CERTISSUEDATE;

ALTER TABLE MASTER ADD COLUMN new_AIRWORTHDATE DATE;

UPDATE MASTER

SET new_AIRWORTHDATE =

TRY_CAST(SUBSTRING(AIRWORTHDATE FROM 1 FOR 4) || '-' ||

SUBSTRING(AIRWORTHDATE FROM 5 FOR 2) || '-' ||

SUBSTRING(AIRWORTHDATE FROM 7 FOR 2) AS DATE);

ALTER TABLE MASTER DROP COLUMN AIRWORTHDATE;

ALTER TABLE MASTER RENAME COLUMN new_AIRWORTHDATE TO AIRWORTHDATE;

ALTER TABLE MASTER ADD COLUMN new_LASTACTIONDATE DATE;

UPDATE MASTER

SET new_LASTACTIONDATE =

TRY_CAST(SUBSTRING(LASTACTIONDATE FROM 1 FOR 4) || '-' ||

SUBSTRING(LASTACTIONDATE FROM 5 FOR 2) || '-' ||

SUBSTRING(LASTACTIONDATE FROM 7 FOR 2) AS DATE);

ALTER TABLE MASTER DROP COLUMN LASTACTIONDATE;

ALTER TABLE MASTER RENAME COLUMN new_LASTACTIONDATE TO LASTACTIONDATE;

ALTER TABLE MASTER ADD COLUMN new_EXPIRATIONDATE DATE;

UPDATE MASTER

SET new_EXPIRATIONDATE =

TRY_CAST(SUBSTRING(EXPIRATIONDATE FROM 1 FOR 4) || '-' ||

SUBSTRING(EXPIRATIONDATE FROM 5 FOR 2) || '-' ||

SUBSTRING(EXPIRATIONDATE FROM 7 FOR 2) AS DATE);

ALTER TABLE MASTER DROP COLUMN EXPIRATIONDATE;

ALTER TABLE MASTER RENAME COLUMN new_EXPIRATIONDATE TO EXPIRATIONDATE;

I'll then use that data to build a more usable aircraft table.

CREATE TABLE aircraft AS (

SELECT

T1.NNUMBER AS tail_num,

T1.SERIALNUMBER AS serial_num,

T1.YEARMFR AS aircraft_year,

T1.NAME AS registration_name,

T1.CERTIFICATION AS certification,

T1.CERTISSUEDATE AS certification_issue_date,

T1.AIRWORTHDATE AS airworth_date,

CASE WHEN T3.ACCAT = '1' THEN 'Land'

WHEN T3.ACCAT = '2' THEN 'Sea'

WHEN T3.ACCAT = '3' THEN 'Amphibian'

END AS aircraft_category,

CASE WHEN T1.TYPEAIRCRAFT = '1' THEN 'Glider'

WHEN T1.TYPEAIRCRAFT = '2' THEN 'Balloon'

WHEN T1.TYPEAIRCRAFT = '3' THEN 'Blimp/Dirigible'

WHEN T1.TYPEAIRCRAFT = '4' THEN 'Fixed wing single engine'

WHEN T1.TYPEAIRCRAFT = '5' THEN 'Fixed wing multi engine'

WHEN T1.TYPEAIRCRAFT = '6' THEN 'Rotorcraft'

WHEN T1.TYPEAIRCRAFT = '7' THEN 'Weight-shift-control'

WHEN T1.TYPEAIRCRAFT = '8' THEN 'Powered Parachute'

WHEN T1.TYPEAIRCRAFT = '9' THEN 'Gyroplane'

WHEN T1.TYPEAIRCRAFT = 'H' THEN 'Hybrid Lift'

WHEN T1.TYPEAIRCRAFT = 'O' THEN 'Other'

END AS aircraft_type,

T3.MFR AS aircraft_manufacturer_name,

T3.MODEL AS aircraft_model_name,

T3.NOSEATS AS aircraft_number_seats,

CASE WHEN T3.ACWEIGHT = 'Class 1' THEN 'Up to 12,499'

WHEN T3.ACWEIGHT = 'Class 2' THEN '12,500 - 19,999'

WHEN T3.ACWEIGHT = 'Class 3' THEN '20,000 and over'

WHEN T3.ACWEIGHT = 'Class 4' THEN 'UAV up to 55'

END AS aircraft_weight,

T3.SPEED AS aircraft_max_speed_mph,

CASE WHEN T1.TYPEENGINE = 0 THEN 'None'

WHEN T1.TYPEENGINE = 1 THEN 'Reciprocating'

WHEN T1.TYPEENGINE = 2 THEN 'Turbo-prop'

WHEN T1.TYPEENGINE = 3 THEN 'Turbo-shaft'

WHEN T1.TYPEENGINE = 4 THEN 'Turbo-jet'

WHEN T1.TYPEENGINE = 5 THEN 'Turbo-fan'

WHEN T1.TYPEENGINE = 6 THEN 'Ramjet'

WHEN T1.TYPEENGINE = 7 THEN '2 Cycle'

WHEN T1.TYPEENGINE = 8 THEN '4 Cycle'

WHEN T1.TYPEENGINE = 9 THEN 'Unknown'

WHEN T1.TYPEENGINE = 10 THEN 'Electric'

WHEN T1.TYPEENGINE = 11 THEN 'Rotary'

END AS aircraft_engine_type,

T2.MFR AS aircraft_engine_manufacturer,

T2.MODEL AS aircraft_engine_model_name,

T2.HORSEPOWER AS aircraft_engine_horsepower,

T2.THRUST AS aircraft_engine_thrust,

T3.NOENG AS aircraft_engine_num,

CASE WHEN T1.STATUSCODE = 'A' THEN 'The Triennial Aircraft Registration form was mailed and has not been returned by the Post Office'

WHEN T1.STATUSCODE = 'D' THEN 'Expired Dealer'

WHEN T1.STATUSCODE = 'E' THEN 'The Certificate of Aircraft Registration was revoked by enforcement action'

WHEN T1.STATUSCODE = 'M' THEN 'Aircraft registered to the manufacturer under their Dealer Certificate'

WHEN T1.STATUSCODE = 'N' THEN 'Non-citizen Corporations which have not returned their flight hour reports'

WHEN T1.STATUSCODE = 'R' THEN 'Registration pending'

WHEN T1.STATUSCODE = 'S' THEN 'Second Triennial Aircraft Registration Form has been mailed and has not been returned by the Post Office'

WHEN T1.STATUSCODE = 'T' THEN 'Valid Registration from a Trainee'

WHEN T1.STATUSCODE = 'V' THEN 'Valid Registration'

WHEN T1.STATUSCODE = 'W' THEN 'Certificate of Registration has been deemed Ineffective or Invalid'

WHEN T1.STATUSCODE = 'X' THEN 'Enforcement Letter'

WHEN T1.STATUSCODE = 'Z' THEN 'Permanent Reserved'

WHEN T1.STATUSCODE = '1' THEN 'Triennial Aircraft Registration form was returned by the Post Office as undeliverable'

WHEN T1.STATUSCODE = '2' THEN 'N-Number Assigned – but has not yet been registered'

WHEN T1.STATUSCODE = '3' THEN 'N-Number assigned as a Non Type Certificated aircraft - but has not yet been registered'

WHEN T1.STATUSCODE = '4' THEN 'N-Number assigned as import - but has not yet been registered'

WHEN T1.STATUSCODE = '5' THEN 'Reserved N-Number'

WHEN T1.STATUSCODE = '6' THEN 'Administratively cancelled'

WHEN T1.STATUSCODE = '7' THEN 'Sale reported'

WHEN T1.STATUSCODE = '8' THEN 'A second attempt has been made at mailing a Triennial Aircraft Registration form to the owner with no response'

WHEN T1.STATUSCODE = '9' THEN 'Certificate of Registration has been revoked'

WHEN T1.STATUSCODE = '10' THEN 'N-Number assigned, has not been registered and is pending cancellation'

WHEN T1.STATUSCODE = '11' THEN 'N-Number assigned as a Non Type Certificated (Amateur) but has not been registered that is pending cancellation'

WHEN T1.STATUSCODE = '12' THEN 'N-Number assigned as import but has not been registered that is pending cancellation'

WHEN T1.STATUSCODE = '13' THEN 'Registration Expired'

WHEN T1.STATUSCODE = '14' THEN 'First Notice for ReRegistration/Renewal'

WHEN T1.STATUSCODE = '15' THEN 'Second Notice for ReRegistration/Renewal'

WHEN T1.STATUSCODE = '16' THEN 'Registration Expired – Pending Cancellation'

WHEN T1.STATUSCODE = '17' THEN 'Sale Reported – Pending Cancellation'

WHEN T1.STATUSCODE = '18' THEN 'Sale Reported – Cancelled'

WHEN T1.STATUSCODE = '19' THEN 'Registration Pending – Pending Cancellation'

WHEN T1.STATUSCODE = '20' THEN 'Registration Pending – Cancelled'

WHEN T1.STATUSCODE = '21' THEN 'Revoked – Pending Cancellation'

WHEN T1.STATUSCODE = '22' THEN 'Revoked - Cancelled'

WHEN T1.STATUSCODE = '23' THEN 'Expired Dealer (Pending Cancellation)'

WHEN T1.STATUSCODE = '24' THEN 'Third Notice for ReRegistration/Renewal'

WHEN T1.STATUSCODE = '25' THEN 'First Notice for Registration Renewal'

WHEN T1.STATUSCODE = '26' THEN 'Second Notice for Registration Renewal'

WHEN T1.STATUSCODE = '27' THEN 'Registration Expired'

WHEN T1.STATUSCODE = '28' THEN 'Third Notice for Registration Renewal'

WHEN T1.STATUSCODE = '29' THEN 'Registration Expired – Pending Cancellation'

END AS registration_status,

T1.EXPIRATIONDATE AS expiration_date,

T1.MODESCODE AS mode_s_code,

T1.UNIQUEID AS uuid

FROM MASTER T1

LEFT JOIN ENGINE T2 ON T1.ENGMFRMDL = T2.CODE

LEFT JOIN ACFTREF T3 ON T1.MFRMDLCODE = T3.CODE);

DROP TABLE MASTER;

DROP TABLE ENGINE;

DROP TABLE ACFTREF;

I'll then take that table and filter it down to the aircraft that are used in the Ontime dataset.

CREATE TABLE aircraft_used AS

SELECT *

FROM aircraft

WHERE tail_Num IN (

SELECT DISTINCT tail_num

FROM flights)

WITH (use_shared_dictionaries='false');

DROP TABLE aircraft;

ALTER TABLE aircraft_used RENAME TO aircraft;

Enriched Airport Data

Below I'll import the FAA's Airport data in.

$ bin/heavysql -u admin -p HyperInteractive -db flights

CREATE TABLE IF NOT EXISTS T_MASTER_CORD (

AIRPORT_SEQ_ID INT,

AIRPORT_ID INT,

AIRPORT TEXT ENCODING DICT(32),

DISPLAY_AIRPORT_NAME TEXT ENCODING DICT(32),

DISPLAY_AIRPORT_CITY_NAME_FULL TEXT ENCODING DICT(32),

AIRPORT_WAC INT,

AIRPORT_COUNTRY_NAME TEXT ENCODING DICT(32),

AIRPORT_COUNTRY_CODE_ISO TEXT ENCODING DICT(8),

AIRPORT_STATE_NAME TEXT ENCODING DICT(32),

AIRPORT_STATE_CODE TEXT ENCODING DICT(8),

AIRPORT_STATE_FIPS SMALLINT,

CITY_MARKET_ID INT,

DISPLAY_CITY_MARKET_NAME_FULL TEXT ENCODING DICT(32),

CITY_MARKET_WAC INT,

LAT_DEGREES INT,

LAT_HEMISPHERE TEXT ENCODING DICT(8),

LAT_MINUTES INT,

LAT_SECONDS INT,

LATITUDE FLOAT,

LON_DEGREES INT,

LON_HEMISPHERE TEXT ENCODING DICT(8),

LON_MINUTES INT,

LON_SECONDS INT,

LONGITUDE FLOAT,

AIRPORT_START_DATE TEXT ENCODING DICT(32),

AIRPORT_THRU_DATE TEXT ENCODING DICT(32),

AIRPORT_IS_CLOSED INT);

COPY T_MASTER_CORD

FROM 'flights_demo/T_MASTER_CORD.csv'

WITH (DELIMITER = ',');

Below I'll create an enriched airports dataset using the FAA table as the source.

CREATE TABLE IF NOT EXISTS airports (

airport_id SMALLINT,

airport TEXT ENCODING DICT(32),

display_airport_name TEXT ENCODING DICT(32),

display_airport_city_name_full TEXT ENCODING DICT(32),

airport_wac_seq_id INTEGER,

airport_wac SMALLINT,

airport_country_name TEXT,

airport_country_code_iso TEXT ENCODING DICT(32),

airport_state_name TEXT ENCODING DICT(32),

airport_state_code TEXT ENCODING DICT(32),

airport_state_fips SMALLINT,

city_market_id INTEGER,

display_city_market_name_full TEXT ENCODING DICT(32),

city_market_wac SMALLINT,

latitude FLOAT,

longitude FLOAT,

airport_start_date DATE,

airport_thru_date DATE,

airport_is_closed SMALLINT);

INSERT INTO airports

SELECT airport_id,

airport,

display_airport_name,

display_airport_city_name_full,

airport_wac_seq_id,

airport_wac,

airport_country_name,

airport_country_code_iso,

airport_state_name,

airport_state_code,

airport_state_fips,

city_market_id,

display_city_market_name_full,

city_market_wac,

latitude,

longitude,

airport_start_date,

airport_thru_date,

airport_is_closed

FROM (

SELECT AIRPORT_ID AS airport_id,

AIRPORT AS airport,

DISPLAY_AIRPORT_NAME AS display_airport_name,

DISPLAY_AIRPORT_CITY_NAME_FULL AS display_airport_city_name_full,

AIRPORT_SEQ_ID AS airport_wac_seq_id,

AIRPORT_WAC AS airport_wac,

AIRPORT_COUNTRY_NAME AS airport_country_name,

AIRPORT_COUNTRY_CODE_ISO AS airport_country_code_iso,

AIRPORT_STATE_NAME AS airport_state_name,

AIRPORT_STATE_CODE AS airport_state_code,

AIRPORT_STATE_FIPS AS airport_state_fips,

CITY_MARKET_ID AS city_market_id,

DISPLAY_CITY_MARKET_NAME_FULL AS display_city_market_name_full,

CITY_MARKET_WAC AS city_market_wac,

LATITUDE AS latitude,

LONGITUDE AS longitude,

TRY_CAST(AIRPORT_START_DATE AS DATE) AS airport_start_date,

TRY_CAST(AIRPORT_THRU_DATE AS DATE) AS airport_thru_date,

AIRPORT_IS_CLOSED AS airport_is_closed,

ROW_NUMBER() OVER (

PARTITION BY AIRPORT_ID

ORDER BY

AIRPORT_ID) AS rn

FROM T_MASTER_CORD

WHERE AIRPORT_ID IN (

SELECT DISTINCT origin_airport_id

FROM flights

)

OR AIRPORT_ID IN (

SELECT DISTINCT dest_airport_id

FROM flights

)

)

WHERE rn = 1;

COMMENT ON COLUMN airports.airport IS 'A three character alpha-numeric code issued by the U.S. Department of Transportation which is the official designation of the airport. The airport code is not always unique to a specific airport because airport codes can change or can be reused.';

DROP TABLE T_MASTER_CORD;

Querying HeavyIQ

I'll connect to the AWS instance using SSH and set up a tunnel so that TCP port 8001 is exposed locally on my machine and all traffic between my machine and the instance is encrypted via SSH.

$ ssh -R 8001:127.0.0.1:8001 3.x.x.x

I'll then open http://127.0.0.1:8001/flights/sql-notebook in a browser.

HEAVY.AI comes with a full suite of notebook and dashboarding tools in its web interface. This creates a one-stop-shop for doing data science and analysis on large datasets while getting the incredible compute performance of Nvidia's GPUs. Below is an example of a dashboard built using their UI.

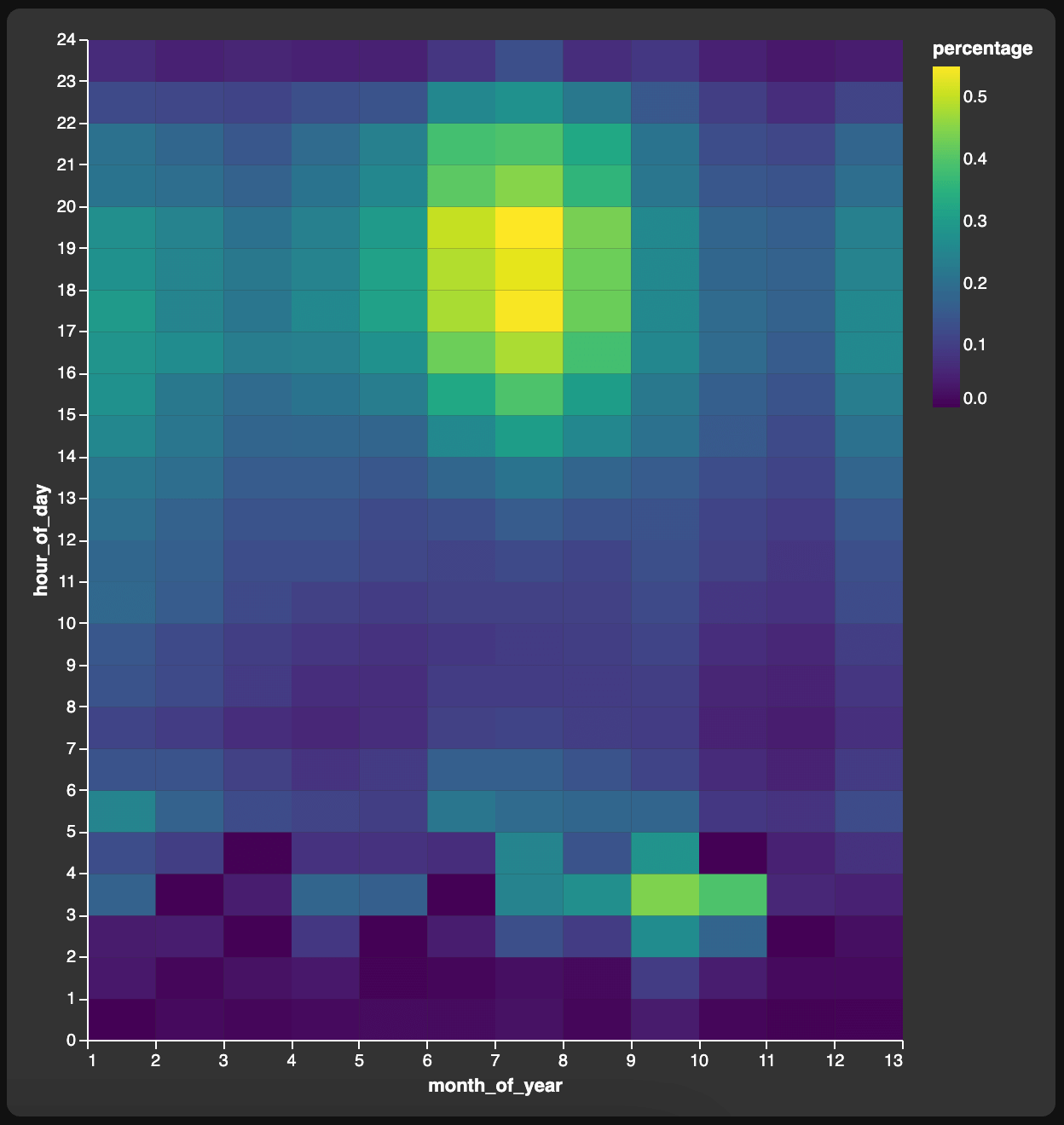

I'll type in the following prompt:

Show the percentage of flights canceled for weather

related reasons by month of year and hour of day

HeavyIQ then generated the following SQL and executed it against HeavyDB.

SELECT

EXTRACT(

MONTH

FROM

crs_dep_timestamp

) AS month_of_year,

EXTRACT(

HOUR

FROM

crs_dep_timestamp

) AS hour_of_day,

AVG(

CASE

WHEN cancellation_code = 'C' THEN 100.0

ELSE 0.0

END

) AS percentage

FROM

flights

WHERE

crs_dep_timestamp IS NOT NULL

GROUP BY

month_of_year,

hour_of_day

ORDER BY

month_of_year,

hour_of_day ASC NULLS LAST;

These are the first 20 results from the above prompt.

| month_of_year | hour_of_day | percentage |

| ------------- | ----------- | -------------------- |

| 1 | 0 | 0.001466311493438256 |

| 1 | 1 | 0.03446641678514498 |

| 1 | 2 | 0.03949447077409163 |

| 1 | 3 | 0.1693958215697346 |

| 1 | 4 | 0.129366106080207 |

| 1 | 5 | 0.2496931736425579 |

| 1 | 6 | 0.1393328415703631 |

| 1 | 7 | 0.1093124884594079 |

| 1 | 8 | 0.1421310052749239 |

| 1 | 9 | 0.1461574224991583 |

| 1 | 10 | 0.1855634765127407 |

| 1 | 11 | 0.1832745104410268 |

| 1 | 12 | 0.1992595886465525 |

| 1 | 13 | 0.21323772886216 |

| 1 | 14 | 0.2520489502638374 |

| 1 | 15 | 0.2735259206409886 |

| 1 | 16 | 0.2821526799031212 |

| 1 | 17 | 0.2966010457056541 |

Below is a heatmap of the results HEAVY.AI's Immerse visualisation system generated.

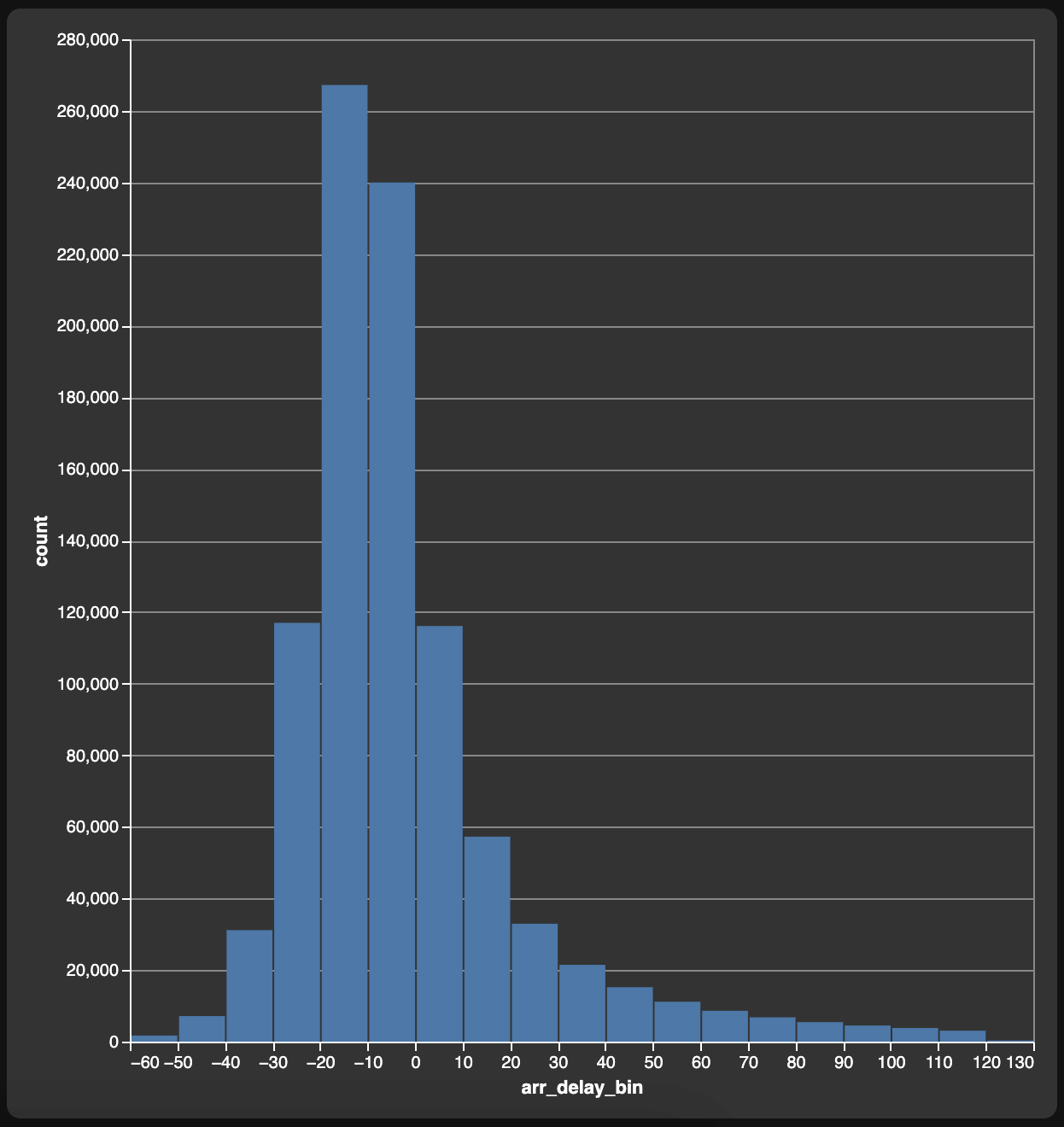

I'll then ask the following:

Show a histogram of arrival delays for Delta Airlines

in 2023 in buckets of 10 minutes from -60 to 120 minutes.

HeavyIQ generated the following SQL.

SELECT

FLOOR(CAST(arr_delay AS DOUBLE) / 10) * 10 AS arr_delay_bin,

COUNT(*) AS "count"

FROM

flights

WHERE

carrier_name = 'Delta Air Lines'

and DATE_TRUNC(YEAR, crs_arr_timestamp) = '2023-01-01 00:00:00'

and arr_delay BETWEEN -60

and 120

GROUP BY

arr_delay_bin

ORDER BY

arr_delay_bin ASC NULLS LAST;

These are the results from the above prompt.

| arr_delay_bin | tot_count |

| ------------- | --------- |

| -60 | 1699 |

| -50 | 7135 |

| -40 | 31139 |

| -30 | 116977 |

| -20 | 267229 |

| -10 | 239993 |

| 0 | 116106 |

| 10 | 57247 |

| 20 | 32923 |

| 30 | 21458 |

| 40 | 15203 |

| 50 | 11148 |

| 60 | 8623 |

| 70 | 6782 |

| 80 | 5441 |

| 90 | 4504 |

| 100 | 3803 |

| 110 | 3054 |

| 120 | 311 |

Below is a bar chart showing the distribution of the above values. This was also generated by Immerse.

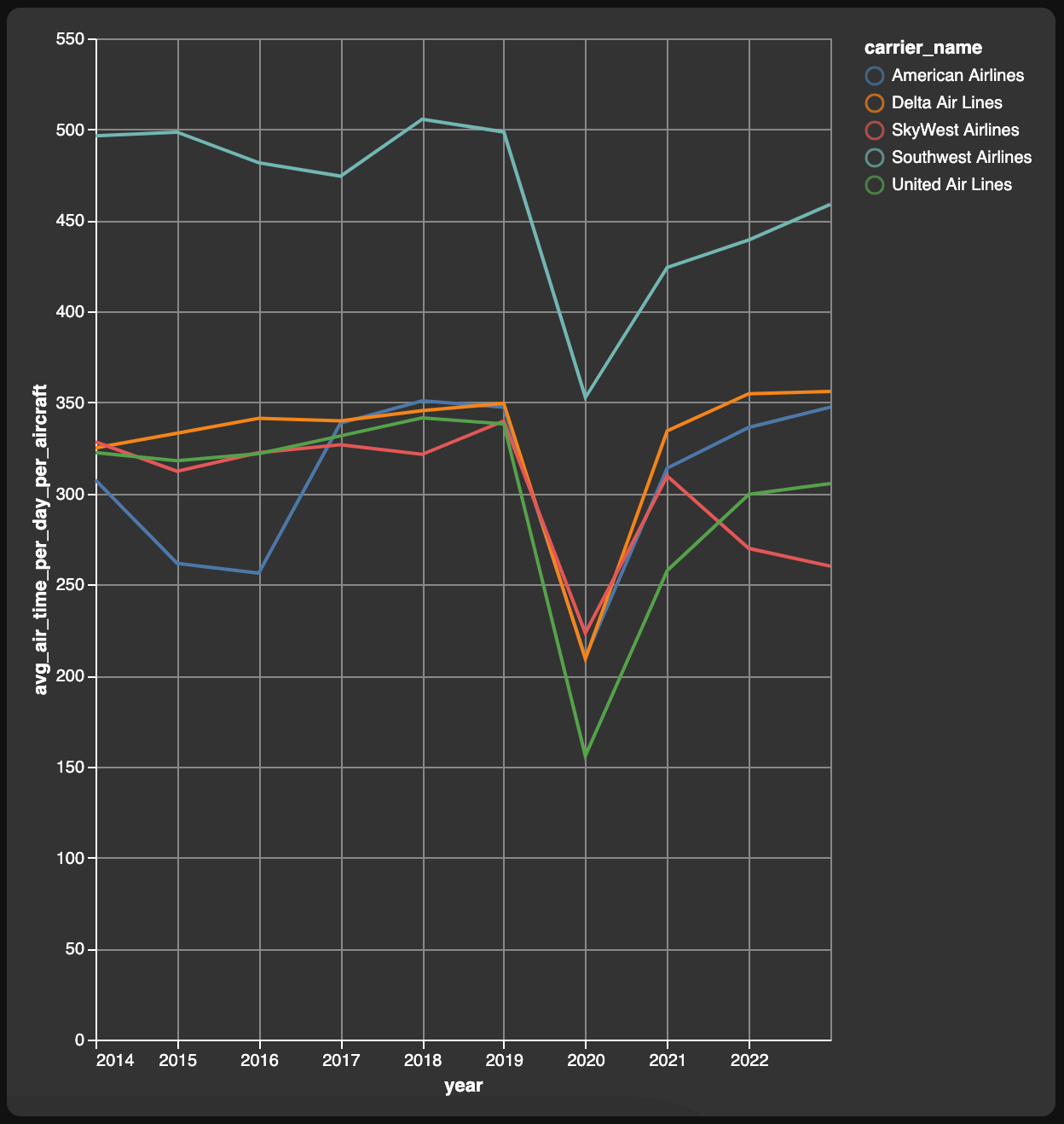

Below is a prompt looking at the number of hours on average each major Airline can keep its fleet in the air.

For each airline with over 300,000 flights in 2023, calculate

the average air time per day (365 days in a year) per aircraft

for each year since 2014. Consider only flights that weren't

cancelled.

HeavyIQ generated the following SQL.

SELECT

DATE_TRUNC(YEAR, crs_dep_timestamp) AS "year",

carrier_name,

CAST(SUM(air_time) AS DOUBLE) / NULLIF(COUNT(DISTINCT tail_num), 0) / 365 AS avg_air_time_per_day_per_aircraft

FROM

flights

WHERE

carrier_name IN (

SELECT

carrier_name

FROM

flights

WHERE

DATE_TRUNC(YEAR, crs_dep_timestamp) = '2023-01-01 00:00:00'

AND carrier_name IS NOT NULL

GROUP BY

carrier_name

HAVING

COUNT(*) > 300000

)

AND cancelled = 0

AND crs_dep_timestamp >= '2014-01-01 00:00:00'

GROUP BY

"year",

carrier_name

ORDER BY

"year",

carrier_name ASC NULLS LAST;

These are the results from the above prompt.

| act_year | carrier_name | avg_air_time_per_day_per_aircraft |

| -------------------- | ------------------ | --------------------------------- |

| 2014-01-01T00:00:00Z | American Airlines | 307.3617289454486 |

| 2014-01-01T00:00:00Z | Delta Air Lines | 325.0075020452211 |

| 2014-01-01T00:00:00Z | SkyWest Airlines | 328.3964120848189 |

| 2014-01-01T00:00:00Z | Southwest Airlines | 496.4657152550999 |

| 2014-01-01T00:00:00Z | United Air Lines | 322.4455494025066 |

| 2015-01-01T00:00:00Z | American Airlines | 261.5670471841705 |

| 2015-01-01T00:00:00Z | Delta Air Lines | 333.0708258884256 |

| 2015-01-01T00:00:00Z | SkyWest Airlines | 312.1633034085625 |

| 2015-01-01T00:00:00Z | Southwest Airlines | 498.4914072229141 |

| 2015-01-01T00:00:00Z | United Air Lines | 318.0415290787149 |

| 2016-01-01T00:00:00Z | American Airlines | 256.2421010092637 |

| 2016-01-01T00:00:00Z | Delta Air Lines | 341.3365227049563 |

| 2016-01-01T00:00:00Z | SkyWest Airlines | 322.4056078767123 |

| 2016-01-01T00:00:00Z | Southwest Airlines | 481.6399413691392 |

| 2016-01-01T00:00:00Z | United Air Lines | 321.8425354713497 |

| 2017-01-01T00:00:00Z | American Airlines | 338.6619623113831 |

...

Below is a prompt looking at route comparison between two Airlines.

Show distinct routes flown by Virgin America in 2016

which were NOT flown by Alaska Airlines in 2023.

HeavyIQ generated the following SQL.

SELECT

DISTINCT T1.origin_airport,

T1.dest_airport

FROM (

SELECT

T2.airport AS origin_airport,

T3.airport AS dest_airport

FROM

flights AS T1

JOIN airports AS T2 ON T1.origin_airport_id = T2.airport_id

JOIN airports AS T3 ON T1.dest_airport_id = T3.airport_id

WHERE

T1.carrier_name = 'Virgin America'

AND DATE_TRUNC(YEAR, T1.crs_dep_timestamp) = '2016-01-01 00:00:00'

) AS T1 LEFT JOIN (

SELECT

T2.airport AS origin_airport,

T3.airport AS dest_airport

FROM

flights AS T1

JOIN airports AS T2 ON T1.origin_airport_id = T2.airport_id

JOIN airports AS T3 ON T1.dest_airport_id = T3.airport_id

WHERE

T1.carrier_name = 'Alaska Airlines'

AND DATE_TRUNC(YEAR, T1.crs_dep_timestamp) = '2023-01-01 00:00:00'

) AS T2 ON T1.origin_airport = T2.origin_airport AND T1.dest_airport = T2.dest_airport

WHERE

T2.origin_airport IS NULL;

These are the results from the above prompt.

| origin_airport | dest_airport |

| -------------- | ------------ |

| LAX | MCO |

| FLL | JFK |

| LAX | JFK |

| LGA | LAS |

| DAL | LAS |

| DAL | LGA |

| LAX | BOS |

| LAX | ORD |

| LAS | DAL |

| LGA | DAL |

| DCA | DAL |

| LAX | DAL |

| SFO | DEN |

| DEN | SFO |

| PSP | SFO |

| DAL | DCA |

| JFK | FLL |

| MCO | LAX |

| JFK | LAX |

| BOS | LAX |

| ONT | LAX |

| ORD | LAX |

| DAL | LAX |

| SFO | PSP |

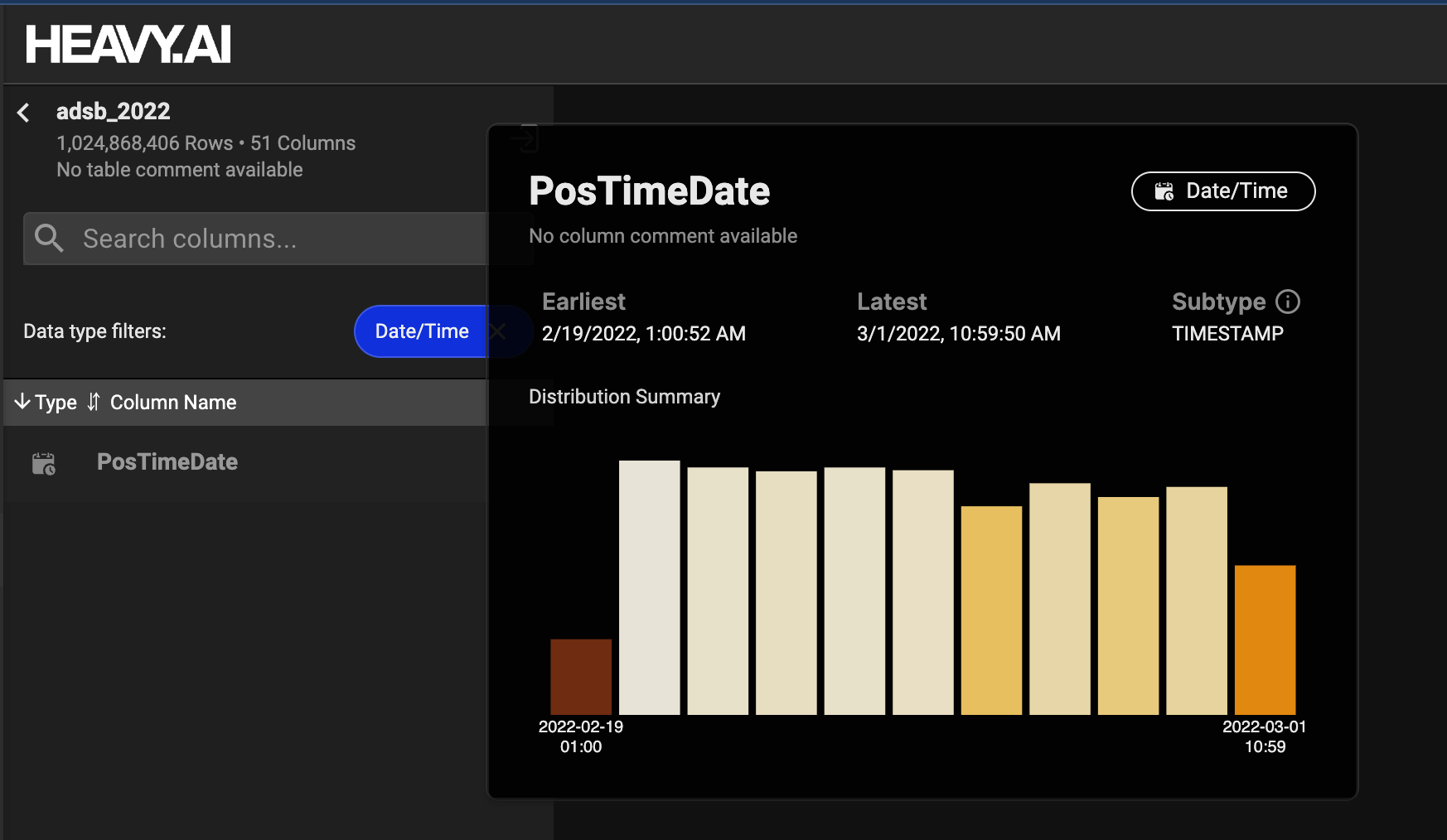

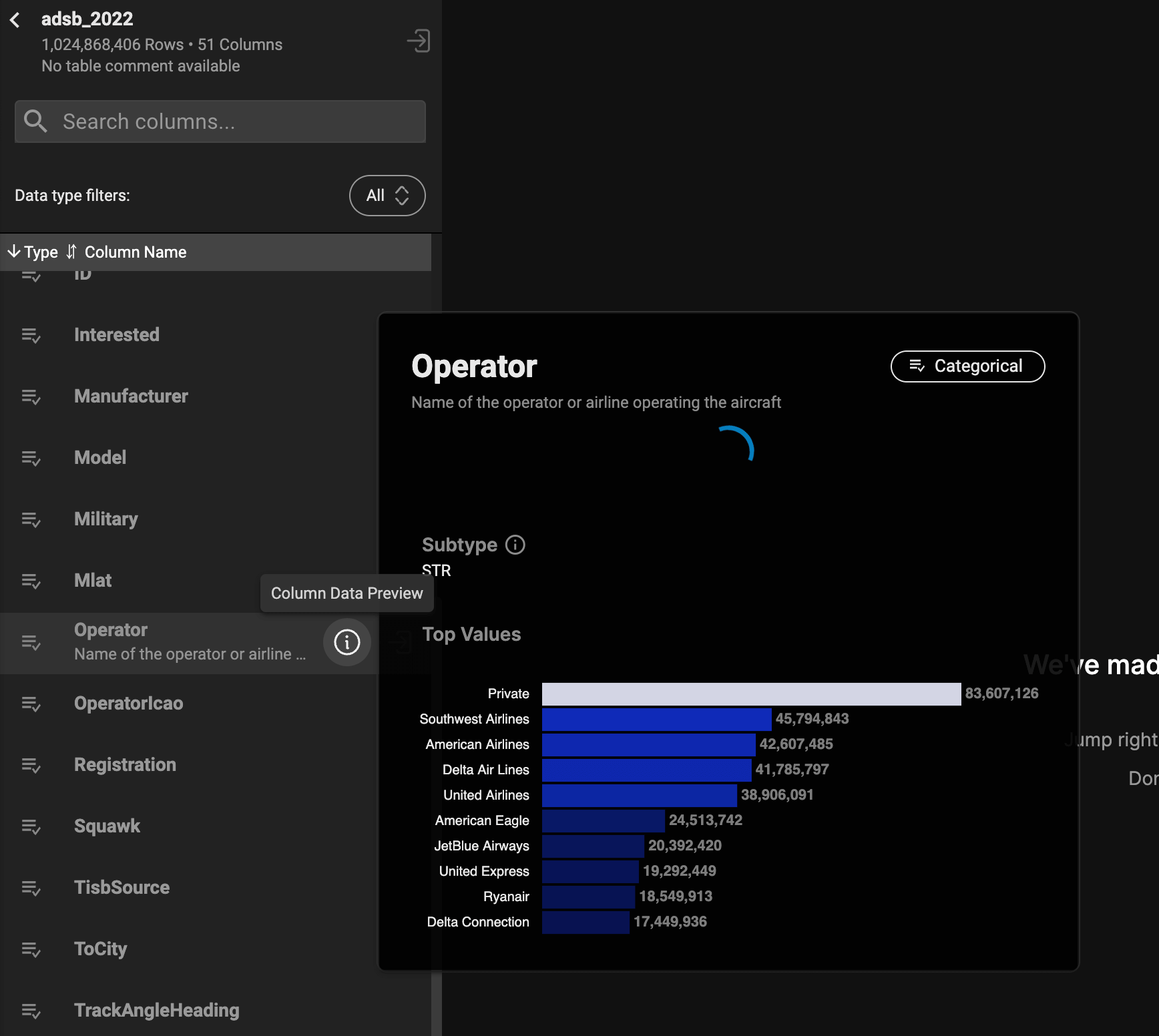

The Immerse interface will also show the value distribution of any columns you're working with. Here's one example showing distinct values being counted.

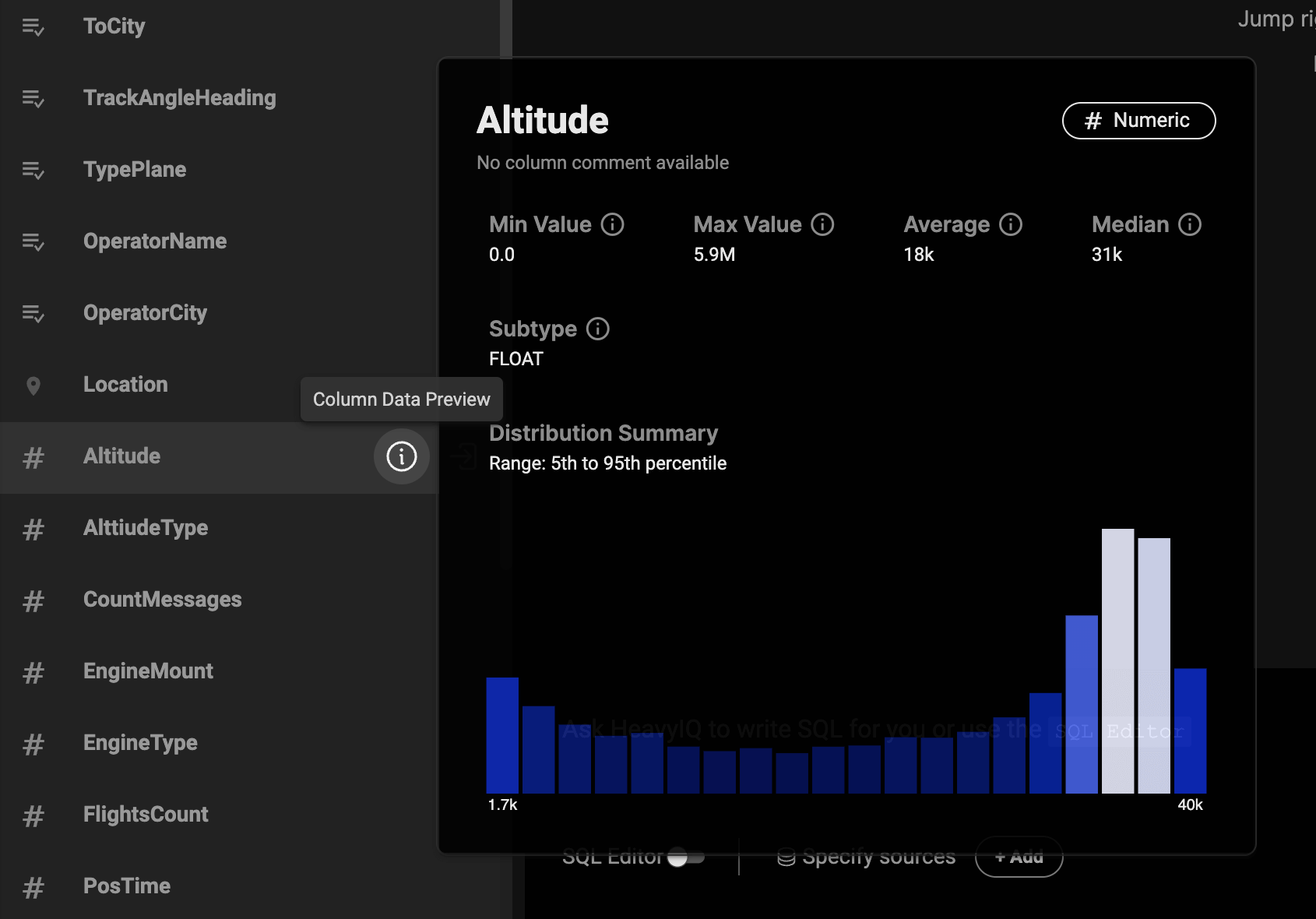

This one shows the distributions of values for a floating-point field.

This one shows the distributions of values for a timestamp field.