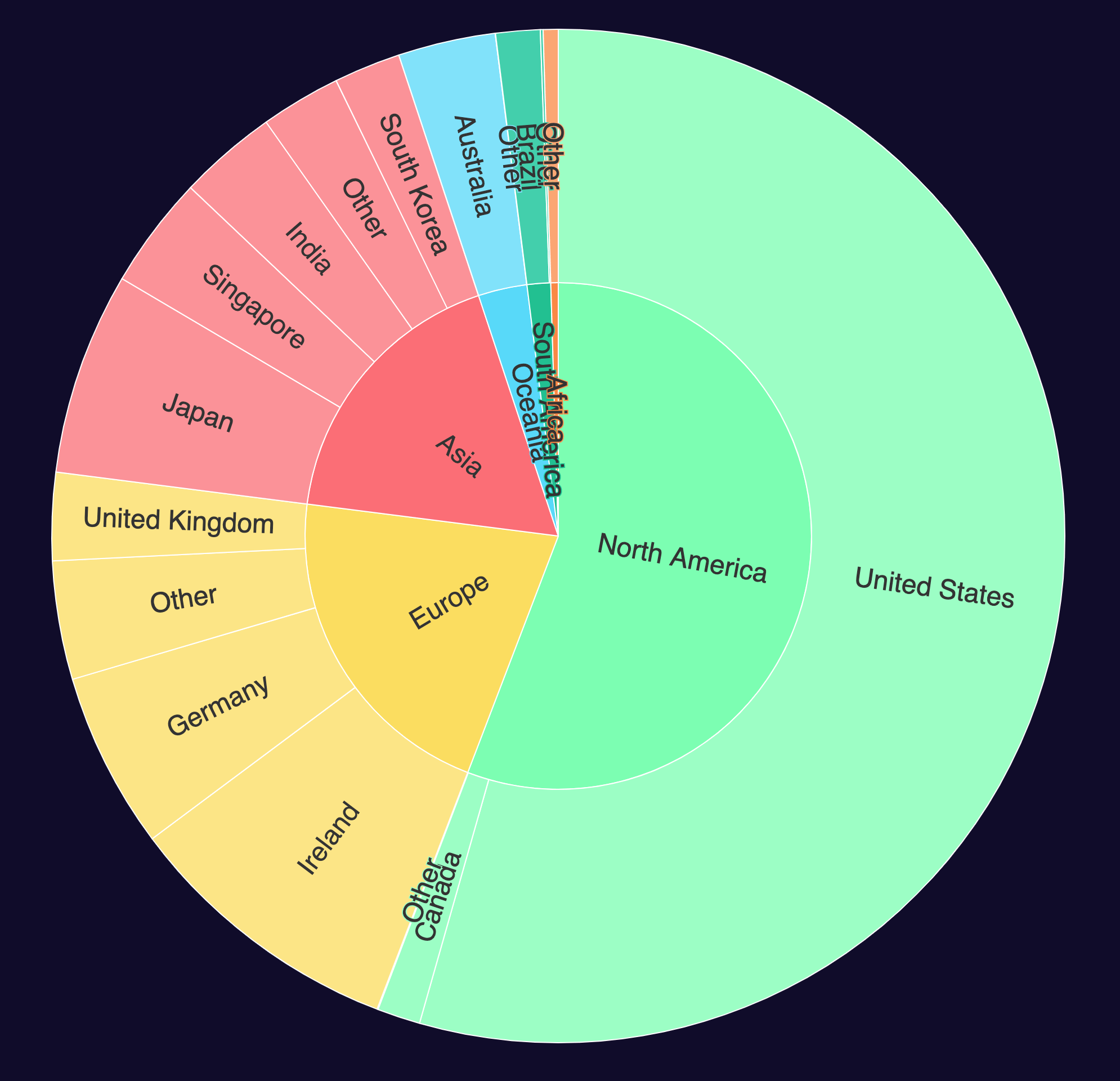

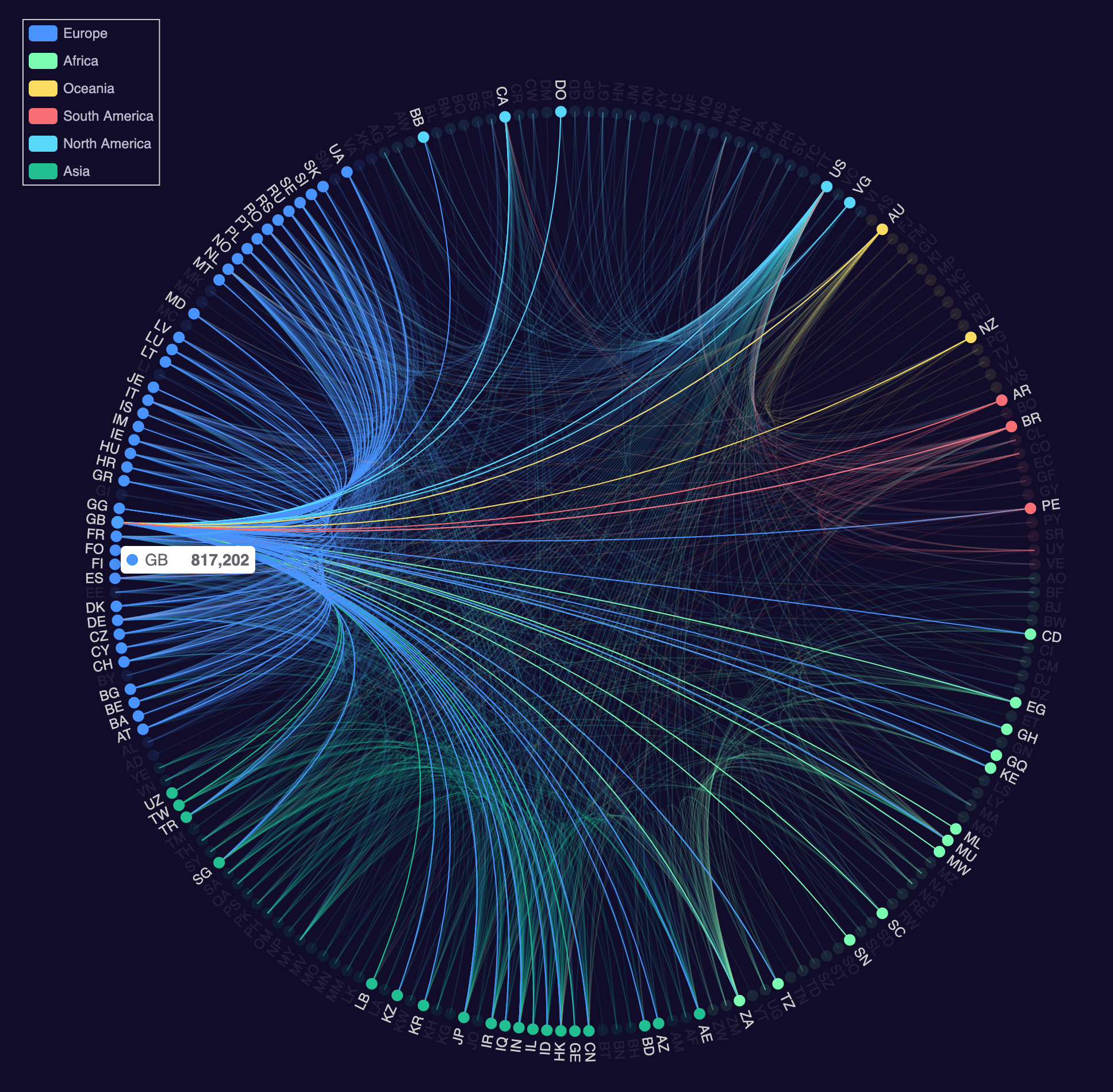

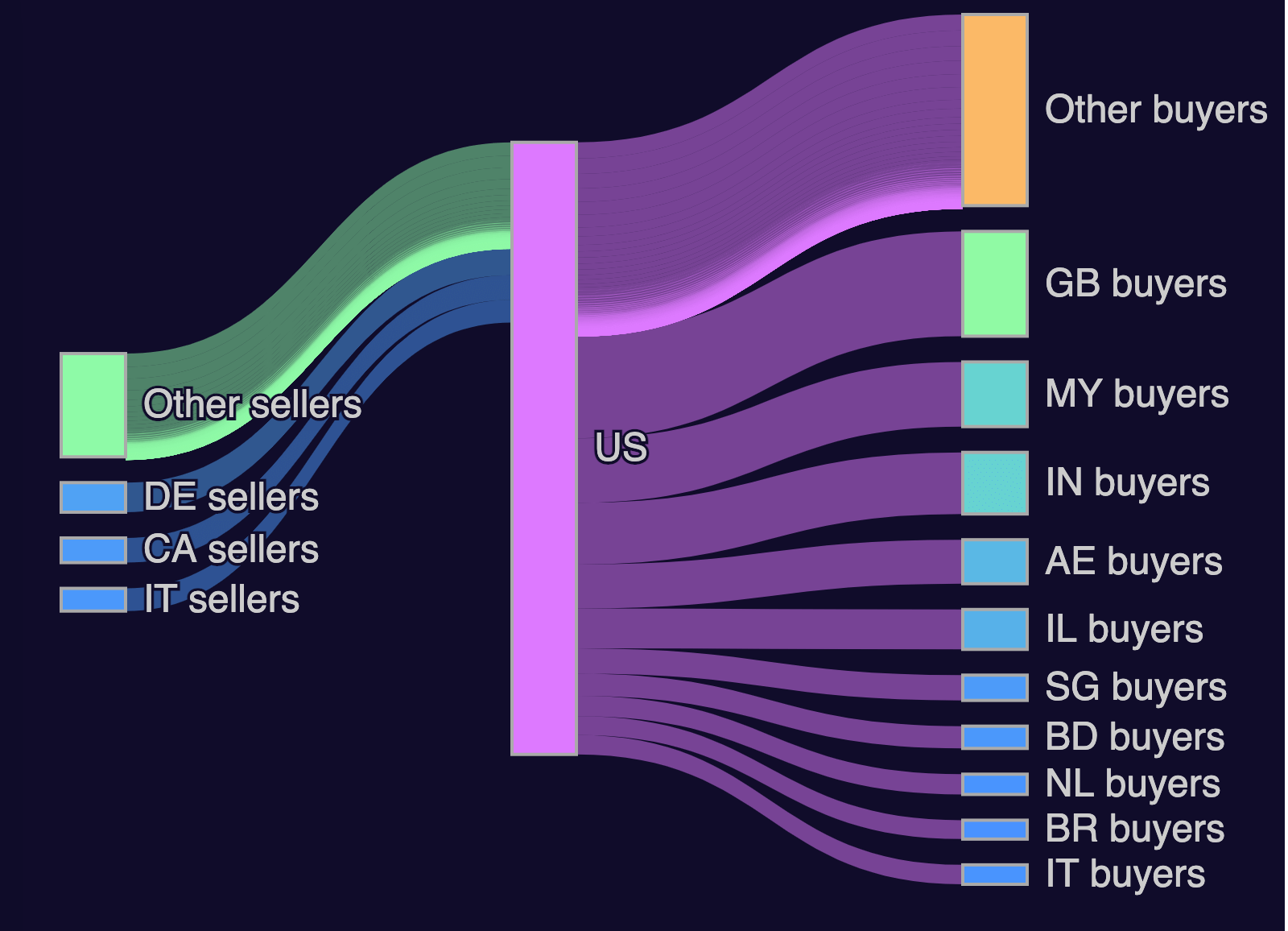

IPv4s are a tradable commodity. Recently sales have priced an IPv4 address at more than $45 if sold in the right block size. This puts the market capitalisation of the allocated IPv4 spectrum at $135B. If IPv4s were a publicly traded firm, they'd be in the world's top 100 in terms of market cap. Below is a chart of the UK's IPv4 trading partners for March.

In 2013, Ben Dowling began looking into ways to detect IP address locations. This was shortly after he arrived in the US from the UK and was then working for Facebook. In 2016, after a stint as the CTO of the meditation app Calm, he decided to turn his hobby into a business and started IPinfo, a firm that builds datasets of IPv4 and IPv6 metadata.

Ben's first customer was Tesla and from there he managed to win deals with Cloudflare, Microsoft and Tencent to name a few. Today IPinfo supplies data to over 400K businesses around the world. Their API had 1.2 trillion hits last year, almost 2.5x more than the previous year.

IPinfo employs 35 members of staff that work to produce an accurate, daily census of IP address physical locations and ownership. Their feeds contain coordinates and city-level address details, ownership and abuse contacts as well as reputation and usage data. This can be handy for identifying VPN users, automated bots run from hosting centres and domain names associated with a given IP address.

Below is an example of this site's IPv4 address.

$ curl -S ipinfo.io/146.185.174.209?token=obscured

{

"ip": "146.185.174.209",

"hostname": "www.marksblogg.com",

"city": "Amsterdam",

"region": "North Holland",

"country": "NL",

"loc": "52.3740,4.8897",

"org": "AS14061 DigitalOcean, LLC",

"postal": "1012",

"timezone": "Europe/Amsterdam",

"asn": {

"asn": "AS14061",

"name": "DigitalOcean, LLC",

"domain": "digitalocean.com",

"route": "146.185.160.0/20",

"type": "hosting"

},

"company": {

"name": "Digital Ocean, Inc.",

"domain": "digitalocean.com",

"type": "hosting"

},

"privacy": {

"vpn": false,

"proxy": false,

"tor": false,

"relay": false,

"hosting": true,

"service": ""

},

"abuse": {

"address": "DigitalOcean, LLC, 101 Avenue of the Americas, 10th Floor, New York, NY, 10013, United States of America",

"country": "US",

"email": "abuse@digitalocean.com",

"name": "Abuse Department",

"network": "146.185.168.0/21",

"phone": "+13478756044"

},

"domains": {

"ip": "146.185.174.209",

"total": 1,

"domains": [

"marksblogg.com"

]

}

}

Back in 2016, IPinfo started by only offering a free API as they were focused on winning software developers over. It was only their commercial offering that allowed customers to download their data in MMDB, CSV and JSON format. When looking up millions or billions of records an API creates too much overhead. Having a local file allows much faster lookups.

In March, IPinfo began offering a free, file-based country-level dataset download for IPv4 and IPv6 addresses. This file is the result of over 900 TB of data on BigQuery being synthesized down into a file a few MB in size. The downloads are refreshed daily so changes in locations and ownership can be seen with 24-hour granularity.

In this blog post, I'll walk through downloading this dataset as well as looking at some interesting IP address space analysis that it can be used for.

Installing Prerequisites

I've installed Homebrew on my 2020 MacBook Pro and I'll use it to install Python and DuckDB.

$ brew install \

csvkit \

duckdb \

jq \

virtualenv

Below are three Python libraries I'll use throughout this post.

$ virtualenv ~/.ipinfo

$ source ~/.ipinfo/bin/activate

$ pip install \

emoji-country-flag \

geoip2 \

pyecharts

Downloading the Free Dataset

The dataset is distributed in CSV, JSON and MMDB formats.

After signing up for a free account, you'll be given an API token. Replace <token> in the URL below with the one you've been assigned. The URL points to the latest release and its contents are refreshed daily.

The following will download the JSON version of the dataset.

$ curl -L 'https://ipinfo.io/data/free/country_asn.json.gz?token=<token>' \

--output country_asn.latest.json.gz

The CSV version of this dataset can be downloaded and decompressed with the following URL.

$ curl -L 'https://ipinfo.io/data/free/country_asn.csv.gz?token=<token>' \

--output country_asn.latest.csv.gz

$ gunzip country_asn.latest.csv.gz

If you would rather download with a web browser, there is a downloads page as well.

An Example Record

The JSON file has around one million line-delimited records. Below is a formatted version of the first record.

$ gunzip -c country_asn.latest.json.gz \

| head -n1 \

| jq -S .

{

"as_domain": "cloudflare.com",

"as_name": "Cloudflare, Inc.",

"asn": "AS13335",

"continent": "OC",

"continent_name": "Oceania",

"country": "AU",

"country_name": "Australia",

"end_ip": "1.0.0.0",

"start_ip": "1.0.0.0"

}

Importing into ClickHouse

ClickHouse supports importing JSON and this format avoids delimiter pitfalls that are common with CSVs. Below I'll create a destination table and then decompress and import the JSON file into the table.

$ clickhouse client

CREATE OR REPLACE TABLE ipinfo_country_asn (

as_domain String,

as_name String,

asn String,

continent String,

continent_name String,

country String,

country_name String,

start_ip String,

end_ip String

) ENGINE=Log;

$ gunzip -c country_asn.latest.json.gz \

| clickhouse client \

-q 'INSERT INTO ipinfo_country_asn

FORMAT JSONEachRow'

ClickHouse has column types optimised for IPv4 and IPv6 addresses. Below I'll create separate tables for the IPv4 and IPv6 records.

$ clickhouse client

CREATE OR REPLACE TABLE ipinfo_ips ENGINE=Log AS

SELECT

as_domain,

as_name,

asn,

continent,

continent_name,

country,

country_name,

CAST(start_ip AS IPv4) start_ipv4,

CAST(end_ip AS IPv4) end_ipv4,

CAST(splitByChar('.', start_ip)[1] AS UInt8) class_a,

CAST(CAST(end_ip AS IPv4) AS UInt32) -

CAST(CAST(start_ip AS IPv4) AS UInt32) + 1 num_ips

FROM ipinfo_country_asn

WHERE start_ip NOT LIKE '%:%'

AND end_ip NOT LIKE '%:%';

CREATE OR REPLACE TABLE ipinfo_ipv6 ENGINE=Log AS

SELECT

as_domain,

as_name,

asn,

continent,

continent_name,

country,

country_name,

CAST(start_ip AS IPv6) start_ipv6,

CAST(end_ip AS IPv6) end_ipv6

FROM ipinfo_country_asn

WHERE start_ip LIKE '%:%'

AND end_ip LIKE '%:%';

Importing into BigQuery

BigQuery doesn't have a dedicated IP address data type so the following will load every column in as a STRING.

$ bq load \

--autodetect \

--source_format=NEWLINE_DELIMITED_JSON \

dataset.ipinfo_free \

./country_asn.latest.json.gz

BigQuery's Net functions can be used to perform IP address-specific tasks on the start_ip and end_ip columns.

Note, both IPv4 and IPv6 addresses live in the start_ip and end_ip columns so if you need to work on only one version matching on a colon in the field will determine if it's IPv4 or IPv6.

SELECT NET.IPV4_TO_INT64(start_ip)

FROM dataset.ipinfo_free

WHERE start_ip NOT LIKE '%:%'

LIMIT 10;

Importing into DuckDB

The official release of DuckDB doesn't yet pre-bundle the inet extension so the start_ip and end_ip columns will be loaded in as VARCHARs.

$ duckdb ipinfo.duckdb

CREATE OR REPLACE TABLE ipinfo_free AS

SELECT *

FROM read_ndjson_auto('country_asn.latest.json.gz');

Importing into DataBricks

DataBricks works well with Parquet files. The following will convert the GZIP-compressed JSON file into a Snappy-compressed Parquet file using DuckDB.

$ echo "COPY (SELECT *

FROM read_ndjson_auto('country_asn.latest.json.gz'))

TO 'country_asn.latest.pq' (FORMAT 'PARQUET',

CODEC 'Snappy');" \

| duckdb

Importing into PostgreSQL

The CSV-formatted distribution of the dataset is easier to import into PostgreSQL than the JSON version. I'll create a new database and table and populate it with the CSV file.

$ createdb ipinfo_free

$ psql ipinfo_free

CREATE TABLE country_asn_latest (

start_ip INET,

end_ip INET,

country VARCHAR(2),

country_name TEXT,

continent VARCHAR(2),

continent_name TEXT,

asn VARCHAR(9),

as_name TEXT,

as_domain TEXT);

\copy country_asn_latest from 'country_asn.latest.csv' CSV HEADER

I'll create unique constraints on the start_ip and end_ip columns.

ALTER TABLE country_asn_latest

ADD CONSTRAINT uniq_start_ip UNIQUE (start_ip);

ALTER TABLE country_asn_latest

ADD CONSTRAINT uniq_end_ip UNIQUE (end_ip);

I'll add an index that should make searching through the table quicker than it would be otherwise.

CREATE INDEX ip_ranges_inverse

ON country_asn_latest ("start_ip", "end_ip" DESC);

ALTER TABLE country_asn_latest

CLUSTER ON ip_ranges_inverse;

VACUUM ANALYZE country_asn_latest;

Importing into SQLite

SQLite3 only supports five data types so every field will be imported as a TEXT field.

$ sqlite3 ipinfo_free.db

.mode csv

.separator ","

.import country_asn.latest.csv country_asn_latest

Importing into any other Database

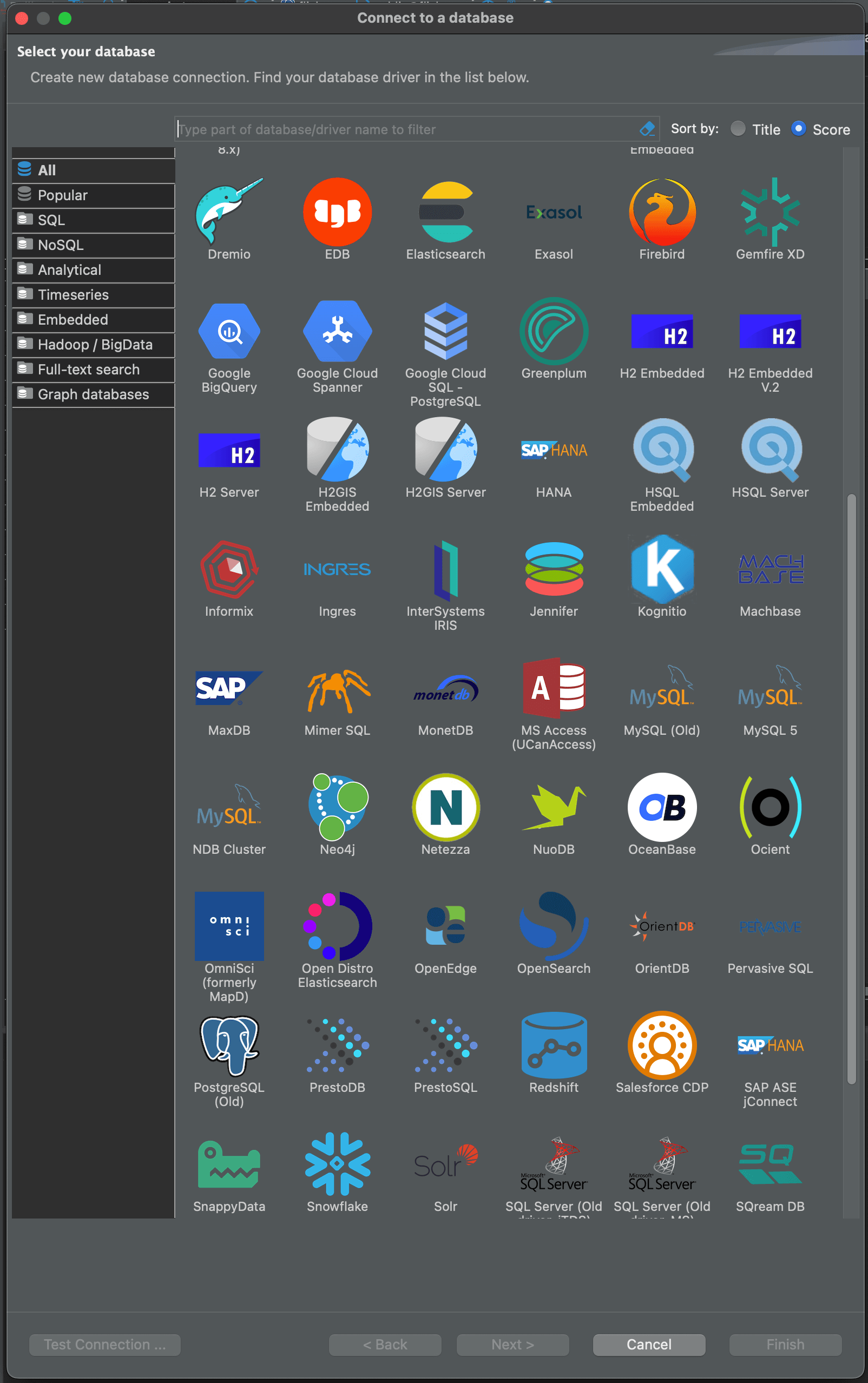

DBeaver is a free, open source, GUI application that can connect to more than 100 different database engines. It can run on macOS, Windows and Linux. It makes it very easy to connect to remote databases and import data from files on your local system.

When you launch the app, create a connection to your destination database.

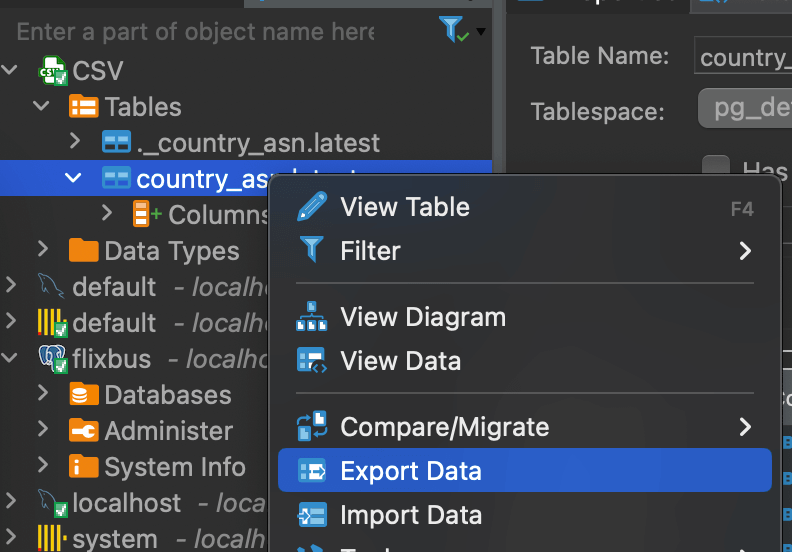

Then, create another new connection and select "CSV" as the database type. Choose country_asn.latest.csv as the source of data.

Right-click on the country_asn.latest in the connections tree on the left of the screen and choose "Export Data". The first screen should have country_asn.latest selected, click "Next".

The target container at the top will have a drop-down of any existing database connections configured. If your destination database isn't already selected, click the "Choose" button in the top right to find it.

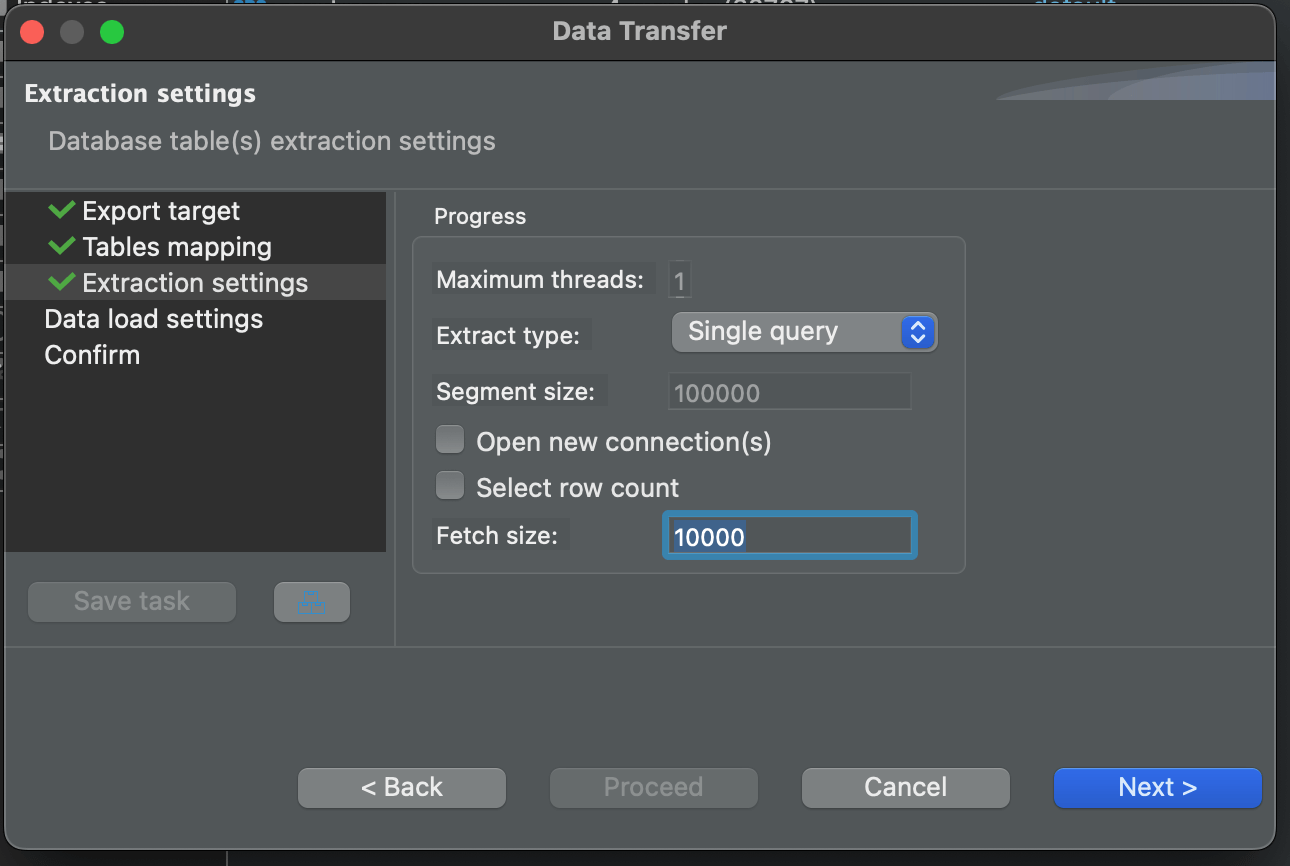

You'll be offered a chance to change the default extraction settings for your database. Below were the options given to me for PostgreSQL. Hit the "Next" button.

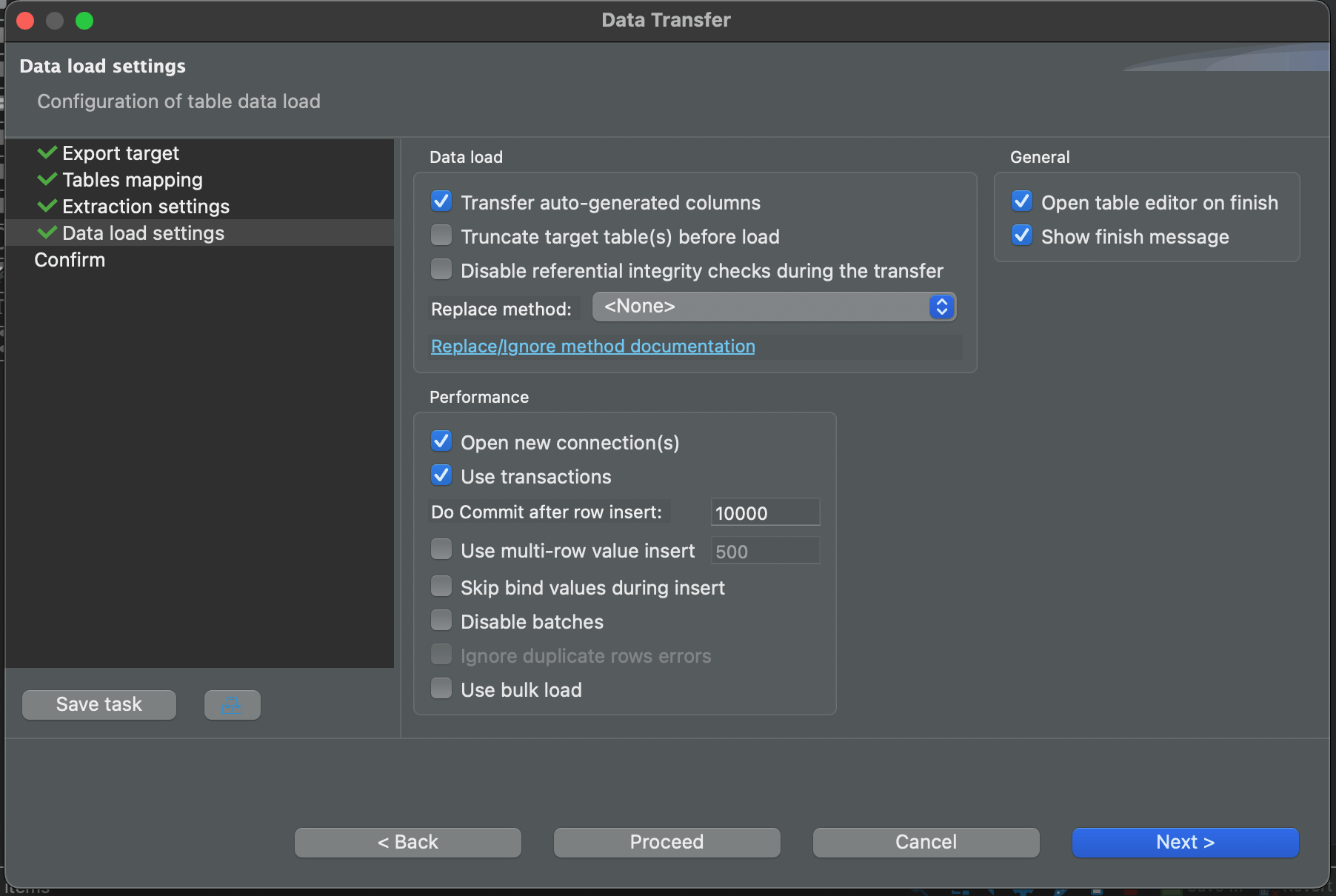

You'll then be offered a chance to change the load settings for your target database. Below are the ones given for PostgreSQL.

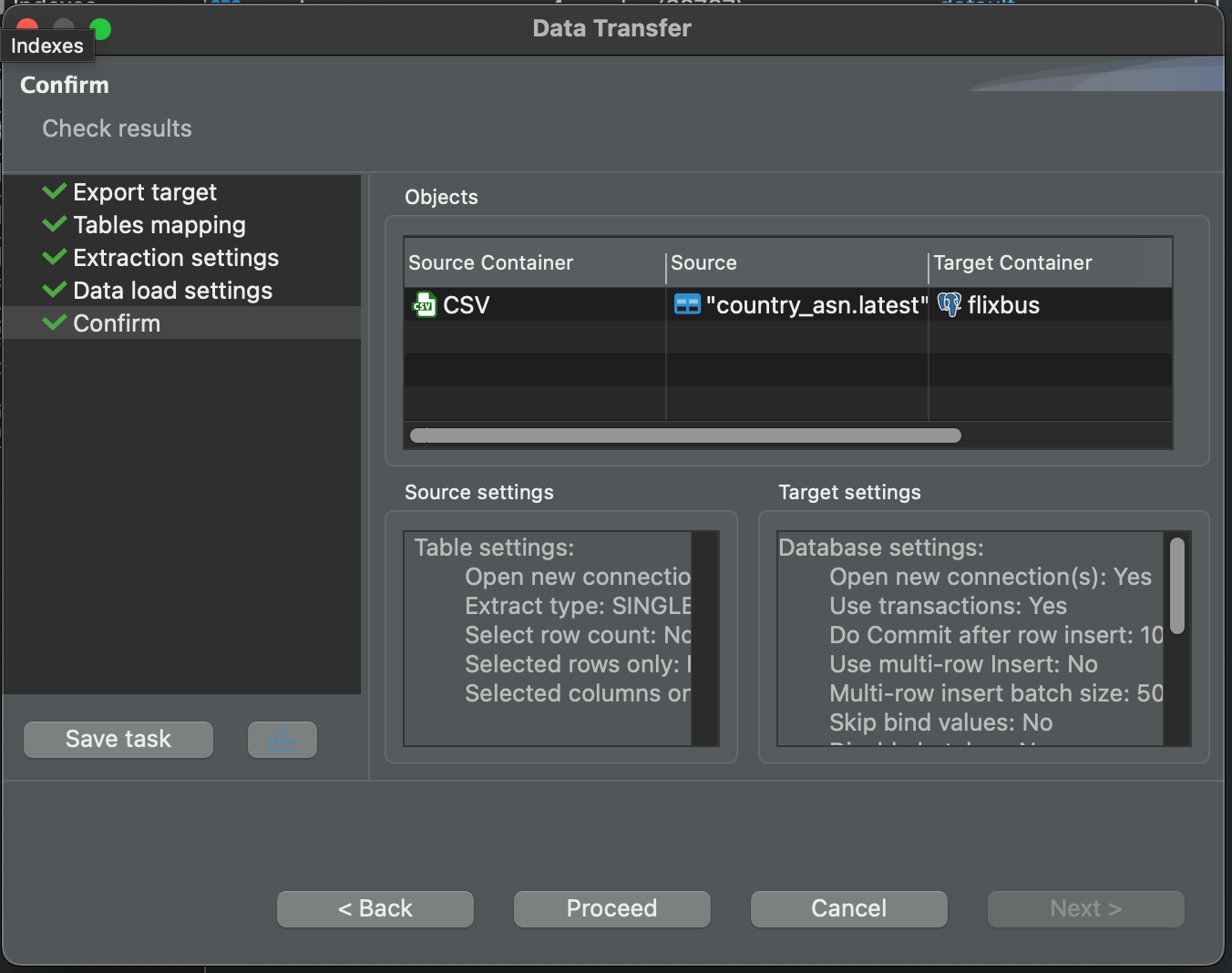

Then finally, you'll be shown a summary. Click "Proceed" at the bottom of the screen and the CSV data will be loaded into your database.

More Accurate Locations

Two years ago I completed a consulting engagement with IPinfo where we worked on the probe network. I published a blog post on how it was able to reveal an IP's location better than any other data source available.

I stopped relying on MaxMind for city data in my other projects after seeing comparisons to IPinfo's efforts. Even if their city-level data is free, I've seen so many issues at the country level that it's a non-starter.

One of IPinfo's prospects is spending $500K / year across five data providers for IP location data. They stated that they found IPinfo's feed more accurate than their efforts.

Below I'll compare the May 10th release of MaxMind's free GeoLite2 country-level dataset against IPinfo's free country-level release for May 9th.

$ vi compare.py

import gzip

import json

import socket

import struct

import geoip2.database

from geoip2.errors import AddressNotFoundError

def ip2int(addr):

return struct.unpack("!I", socket.inet_aton(addr))[0]

ipinfo_file = 'country_asn_v1.2023-05-21.json.gz'

maxmind_file = 'GeoLite2-Country_20230519/GeoLite2-Country.mmdb'

reader = geoip2.database.Reader(maxmind_file)

for line in gzip.open(ipinfo_file):

ipinfo_rec = json.loads(line)

if ':' in ipinfo_rec['start_ip']: # Skip IPv6

continue

num_ips = ip2int(ipinfo_rec['end_ip']) - \

ip2int(ipinfo_rec['start_ip']) + 1

try:

resp = reader.country(ipinfo_rec['start_ip'])

except AddressNotFoundError:

print(json.dumps({

'ipv4_not_found': True,

'start_ip': ipinfo_rec['start_ip'],

'end_ip': ipinfo_rec['end_ip'],

'matched': False,

'num_ips': num_ips}))

continue

if not resp.country.iso_code:

print(json.dumps({

'country_not_found': True,

'start_ip': ipinfo_rec['start_ip'],

'end_ip': ipinfo_rec['end_ip'],

'matched': False,

'num_ips': num_ips}))

continue

if resp.country.iso_code.lower() != ipinfo_rec['country'].lower():

print(json.dumps({

'start_ip': ipinfo_rec['start_ip'],

'end_ip': ipinfo_rec['end_ip'],

'matched': False,

'num_ips': num_ips,

'ipinfo_answer': ipinfo_rec,

'maxmind_answer': resp.raw}))

else:

print(json.dumps({

'start_ip': ipinfo_rec['start_ip'],

'end_ip': ipinfo_rec['end_ip'],

'matched': True,

'num_ips': num_ips}))

I'll run the following which will produce a line-delimited JSON file containing the results of every IPinfo record's lookup against the MaxMind dataset.

$ python compare.py > comparison.jsonl

I'll import those results into DuckDB.

$ duckdb ipv4_comp.duckdb

CREATE OR REPLACE TABLE ipv4_comp AS

SELECT *

FROM read_ndjson_auto('comparison.jsonl',

auto_detect=true,

sample_size=-1);

Three billion IPv4 addresses matched at the country level between the two datasets while almost 118 million didn't.

SELECT matched,

SUM(num_ips)::int64 AS num_ips

FROM ipv4_comp

GROUP BY 1;

┌─────────┬────────────┐

│ matched │ num_ips │

│ boolean │ int64 │

├─────────┼────────────┤

│ true │ 3582188753 │

│ false │ 117814408 │

└─────────┴────────────┘

Below are the 80K+ IPv4 addresses broken down by country IPinfo believes are being used in Iran that MaxMind says aren't.

SELECT maxmind_answer.country.names.en,

SUM(num_ips)::int64 AS num_ips

FROM ipv4_comp

WHERE matched = false

AND ipinfo_answer.country = 'IR'

GROUP BY 1

ORDER BY 2 DESC;

┌──────────────────────┬─────────┐

│ en │ num_ips │

│ varchar │ int64 │

├──────────────────────┼─────────┤

│ United States │ 25802 │

│ Hong Kong │ 17154 │

│ United Kingdom │ 15359 │

│ Switzerland │ 4096 │

│ Moldova │ 3840 │

│ Netherlands │ 3703 │

│ United Arab Emirates │ 2627 │

│ Turkey │ 1919 │

│ Russia │ 1536 │

│ Ukraine │ 1024 │

│ Romania │ 1024 │

│ France │ 1024 │

│ Germany │ 784 │

│ Poland │ 512 │

│ Australia │ 384 │

│ Canada │ 288 │

│ Mauritius │ 256 │

│ Georgia │ 256 │

│ Lithuania │ 256 │

│ Japan │ 58 │

│ Austria │ 52 │

├──────────────────────┴─────────┤

│ 21 rows 2 columns │

└────────────────────────────────┘

There were over ~34M IPv4 addresses that MaxMind didn't have any record of.

SELECT SUM(num_ips)::int64 AS num_ips

FROM ipv4_comp

WHERE ipv4_not_found = true;

┌──────────┐

│ num_ips │

│ int64 │

├──────────┤

│ 33890979 │

└──────────┘

There were over 2.4M IPv4s which MaxMind had a record of but didn't know which country they were being used from.

SELECT SUM(num_ips)::int64 AS num_ips

FROM ipv4_comp

WHERE country_not_found = true;

┌─────────┐

│ num_ips │

│ int64 │

├─────────┤

│ 2474993 │

└─────────┘

Every continent has millions of IPv4s that don't match.

SELECT ipinfo_answer.continent_name,

SUM(num_ips)::int64 AS num_ips

FROM ipv4_comp

WHERE matched = false

AND LENGTH(ipinfo_answer.continent_name)

GROUP BY 1

ORDER BY 2 DESC;

┌────────────────┬──────────┐

│ continent_name │ num_ips │

│ varchar │ int64 │

├────────────────┼──────────┤

│ Europe │ 31389647 │

│ North America │ 27824491 │

│ Asia │ 13495148 │

│ Africa │ 5140919 │

│ Oceania │ 2039628 │

│ South America │ 1558603 │

└────────────────┴──────────┘

These mismatches involve some of the world's most well-known technology firms.

SELECT ipinfo_answer.as_domain,

SUM(num_ips)::int64 AS num_ips

FROM ipv4_comp

WHERE matched = false

AND LENGTH(ipinfo_answer.as_domain)

GROUP BY 1

ORDER BY 2 DESC

LIMIT 25;

┌─────────────────────┬─────────┐

│ as_domain │ num_ips │

│ varchar │ int64 │

├─────────────────────┼─────────┤

│ microsoft.com │ 5581277 │

│ verizonbusiness.com │ 3530842 │

│ lilly.com │ 2816884 │

│ mail.mil │ 2302500 │

│ lumen.com │ 1974866 │

│ orange.com │ 1971795 │

│ cogentcomm.biz │ 1844166 │

│ amazon.com │ 1717345 │

│ gtt.net │ 1484818 │

│ akamai.com │ 1378059 │

│ africaoncloud.net │ 1113072 │

│ softlayer.com │ 980358 │

│ nokia.com │ 770068 │

│ multacom.com │ 760320 │

│ bt.com │ 733476 │

│ ovhcloud.com │ 598571 │

│ tele2.se │ 583465 │

│ vodafone.it │ 534272 │

│ apple.com │ 528547 │

│ att.com │ 522777 │

│ google.com │ 522269 │

│ seacom.com │ 350852 │

│ clayer.net │ 327168 │

│ liquidtelecom.com │ 311140 │

│ colt.net │ 262034 │

├─────────────────────┴─────────┤

│ 25 rows 2 columns │

└───────────────────────────────┘

Based on my detailed analysis I'm lead to believe that IPinfo's geolocation data is vastly more accurate than Maxmind's.

How often do IPs change hands?

Below I'll import a few releases of the dataset. These were downloaded on the date of each release. I'll run them through the following enrichment script to determine if a record is for IPv4 or IPv6, get the number of useable IPv4s, pre-bake the class A number as a field and bake the country's flag as an emoji for use later on.

$ vi enrich.py

import ipaddress

import json

import sys

import flag

for line in sys.stdin:

rec = json.loads(line)

rec['emoji'] = flag.flag(rec['country'])

rec['is_ipv4'] = ':' not in rec['start_ip']

if rec['is_ipv4']:

rec['num_ips'] = int(ipaddress.IPv4Address(rec['end_ip'])) - \

int(ipaddress.IPv4Address(rec['start_ip'])) + 1

rec['class_a'] = int(rec['start_ip'].split('.')[0])

else:

rec['num_ips'] = int(ipaddress.IPv6Address(rec['end_ip'])) - \

int(ipaddress.IPv6Address(rec['start_ip'])) + 1

print(json.dumps(rec))

Below I'll decompress, enrich and import each file into DuckDB.

$ for MMDD in 03-06 03-27 05-09 05-21; do

gunzip -c country_asn_v1.2023-$MMDD.json.gz \

| python3 enrich.py \

> country_asn_v1.enriched.2023-$MMDD.json

done

$ duckdb deals.duckdb

CREATE OR REPLACE TABLE ipinfo_country_asn_20230306 AS

SELECT *

FROM read_ndjson_auto('country_asn_v1.enriched.2023-03-06.json',

sample_size=-1);

CREATE OR REPLACE TABLE ipinfo_country_asn_20230327 AS

SELECT *

FROM read_ndjson_auto('country_asn_v1.enriched.2023-03-27.json',

sample_size=-1);

CREATE OR REPLACE TABLE ipinfo_country_asn_20230509 AS

SELECT *

FROM read_ndjson_auto('country_asn_v1.enriched.2023-05-09.json',

sample_size=-1);

CREATE OR REPLACE TABLE ipinfo_country_asn_20230521 AS

SELECT *

FROM read_ndjson_auto('country_asn_v1.enriched.2023-05-21.json',

sample_size=-1);

I'll then extract both tables' records into a single table. I'll add a downloaded_at field so their source is distinguishable.

CREATE OR REPLACE TABLE ipinfo_ips AS

SELECT *,

'2023-03-06'::DATE downloaded_at

FROM ipinfo_country_asn_20230306;

INSERT INTO ipinfo_ips

SELECT *,

'2023-03-27'::DATE downloaded_at

FROM ipinfo_country_asn_20230327;

INSERT INTO ipinfo_ips

SELECT *,

'2023-05-09'::DATE downloaded_at

FROM ipinfo_country_asn_20230509;

INSERT INTO ipinfo_ips

SELECT *,

'2023-05-21'::DATE downloaded_at

FROM ipinfo_country_asn_20230521;

In a 21-day period in March, Asia saw a net increase of 6.5M useable IPv4 addresses.

SELECT continent_name,

SUM(num_ips) FILTER (downloaded_at='2023-03-27')::int128 -

SUM(num_ips) FILTER (downloaded_at='2023-03-06')::int128 delta

FROM ipinfo_ips

WHERE is_ipv4

GROUP BY 1

ORDER BY 2 DESC;

┌────────────────┬──────────┐

│ continent_name │ delta │

│ varchar │ int128 │

├────────────────┼──────────┤

│ Asia │ 6523593 │

│ South America │ -231716 │

│ Africa │ -335421 │

│ Europe │ -436671 │

│ Oceania │ -633587 │

│ North America │ -3266988 │

└────────────────┴──────────┘

The top net-buyers organisations of IPv4s in March were Chinese and the top net-sellers were American.

.maxrows 15

SELECT country_name,

SUM(num_ips) FILTER (downloaded_at='2023-03-27')::int128 -

SUM(num_ips) FILTER (downloaded_at='2023-03-06')::int128 delta

FROM ipinfo_ips

WHERE is_ipv4

GROUP BY 1

ORDER BY 2 DESC;

┌────────────────┬──────────┐

│ country_name │ delta │

│ varchar │ int128 │

├────────────────┼──────────┤

│ China │ 5716966 │

│ Hong Kong │ 322896 │

│ Israel │ 198306 │

│ Argentina │ 162618 │

│ Netherlands │ 161754 │

│ Iran │ 156961 │

│ India │ 156342 │

│ Colombia │ 142934 │

│ · │ · │

│ · │ · │

│ · │ · │

│ Germany │ -191119 │

│ Canada │ -218411 │

│ United Kingdom │ -339791 │

│ Mauritius │ -541533 │

│ Australia │ -565282 │

│ Uruguay │ -666604 │

│ United States │ -3094670 │

├────────────────┴──────────┤

│ 243 rows (15 shown) │

└───────────────────────────┘

These were the top net-sellers in March.

SELECT as_domain,

SUM(num_ips) FILTER (downloaded_at='2023-03-27')::int128 -

SUM(num_ips) FILTER (downloaded_at='2023-03-06')::int128 delta

FROM ipinfo_ips

WHERE is_ipv4

GROUP BY 1

ORDER BY 2 NULLS LAST

LIMIT 10;

┌─────────────────────┬───────────┐

│ as_domain │ delta │

│ varchar │ int128 │

├─────────────────────┼───────────┤

│ tsinghua.edu.cn │ -13352824 │

│ chinatelecom.com.cn │ -3248787 │

│ etisalat.ae │ -2384968 │

│ claro.com.co │ -2099465 │

│ telefonica.com │ -1938125 │

│ 10086.cn │ -1403341 │

│ att.com │ -1097554 │

│ jastel.co.th │ -1042799 │

│ dodiis.mil │ -917504 │

│ bta.net.cn │ -872924 │

├─────────────────────┴───────────┤

│ 10 rows 2 columns │

└─────────────────────────────────┘

These were the top net-buyers in March.

SELECT as_domain,

SUM(num_ips) FILTER (downloaded_at='2023-03-27')::int128 -

SUM(num_ips) FILTER (downloaded_at='2023-03-06')::int128 delta

FROM ipinfo_ips

WHERE LENGTH(as_domain)

AND is_ipv4

GROUP BY 1

ORDER BY 2 DESC NULLS LAST

LIMIT 10;

┌──────────────────┬──────────┐

│ as_domain │ delta │

│ varchar │ int128 │

├──────────────────┼──────────┤

│ cernet.edu.cn │ 13361804 │

│ chinatelecom.cn │ 7074645 │

│ cei.gov.cn │ 4128768 │

│ chinaunicom.cn │ 2855128 │

│ mail.mil │ 1443589 │

│ homeplus.net.tw │ 1185762 │

│ chinamobile.com │ 1053041 │

│ vodafoneidea.com │ 704236 │

│ pccwglobal.com │ 516529 │

│ gtt.net │ 418549 │

├──────────────────┴──────────┤

│ 10 rows 2 columns │

└─────────────────────────────┘

Without iterating through all 3B+ IPv4s, I'll attempt to get a rough idea of which countries have sold IPv4s to one another over a 12-day period this month.

COPY (

SELECT a.country source,

b.country target,

sum(a.num_ips)::uint64 AS value

FROM ipinfo_ips a

LEFT JOIN ipinfo_ips b ON a.start_ip = b.start_ip

WHERE a.downloaded_at = '2023-05-09'

AND b.downloaded_at = '2023-05-21'

AND a.country != b.country

AND a.is_ipv4

AND b.is_ipv4

GROUP BY 1, 2

ORDER BY 3 DESC

) TO 'links.json';

The above produced 1,644 country pairs totalling 10,941,802 IPv4s. Below is the first record.

$ head -n1 links.json | jq -S .

{

"source": "SE",

"target": "DK",

"value": 581460

}

I'll use eCharts to render a Sankey diagram that can display which countries are buying and selling with one another. I've created a top 50 limit so the chart remains readable.

$ python

import json

from pyecharts.charts import Sankey

import pyecharts.options as opts

def render(country='US', group_under=150_000):

links = [json.loads(x)

for x in open('links.json', 'r')

if json.loads(x)['source'] == country

or json.loads(x)['target'] == country]

# Cyclical DAGs are unsupported by eCharts's Sankey chart. Prefix

# with the side of the deal the non-subject country is on.

links2 = []

for rec in links:

if rec['source'] != country:

if rec['value'] < group_under:

rec['source'] = 'Other sellers'

else:

rec['source'] = rec['source'] + ' sellers'

if rec['target'] != country:

if rec['value'] < group_under:

rec['target'] = 'Other buyers'

else:

rec['target'] = rec['target'] + ' buyers'

links2.append(rec)

links = links2

nodes = [{'name': y}

for y in set([x['source'] for x in links] +

[x['target'] for x in links])]

itemstyle_opts = opts.ItemStyleOpts(border_width=1,

border_color='#aaa')

linestyle_opt = opts.LineStyleOpts(color='source',

curve=0.5,

opacity=0.5)

Sankey(init_opts=opts.InitOpts(width='400px',

height='300px')).add(

series_name='',

nodes=nodes,

links=links,

itemstyle_opts=itemstyle_opts,

linestyle_opt=linestyle_opt,

tooltip_opts=opts.TooltipOpts(trigger_on='mousemove'))\

.set_global_opts(title_opts=opts.TitleOpts(title=''))\

.set_dark_mode()\

.render('transfers.%s.html' % country)

render('US', group_under=50_000)

render('CN', group_under=1_000)

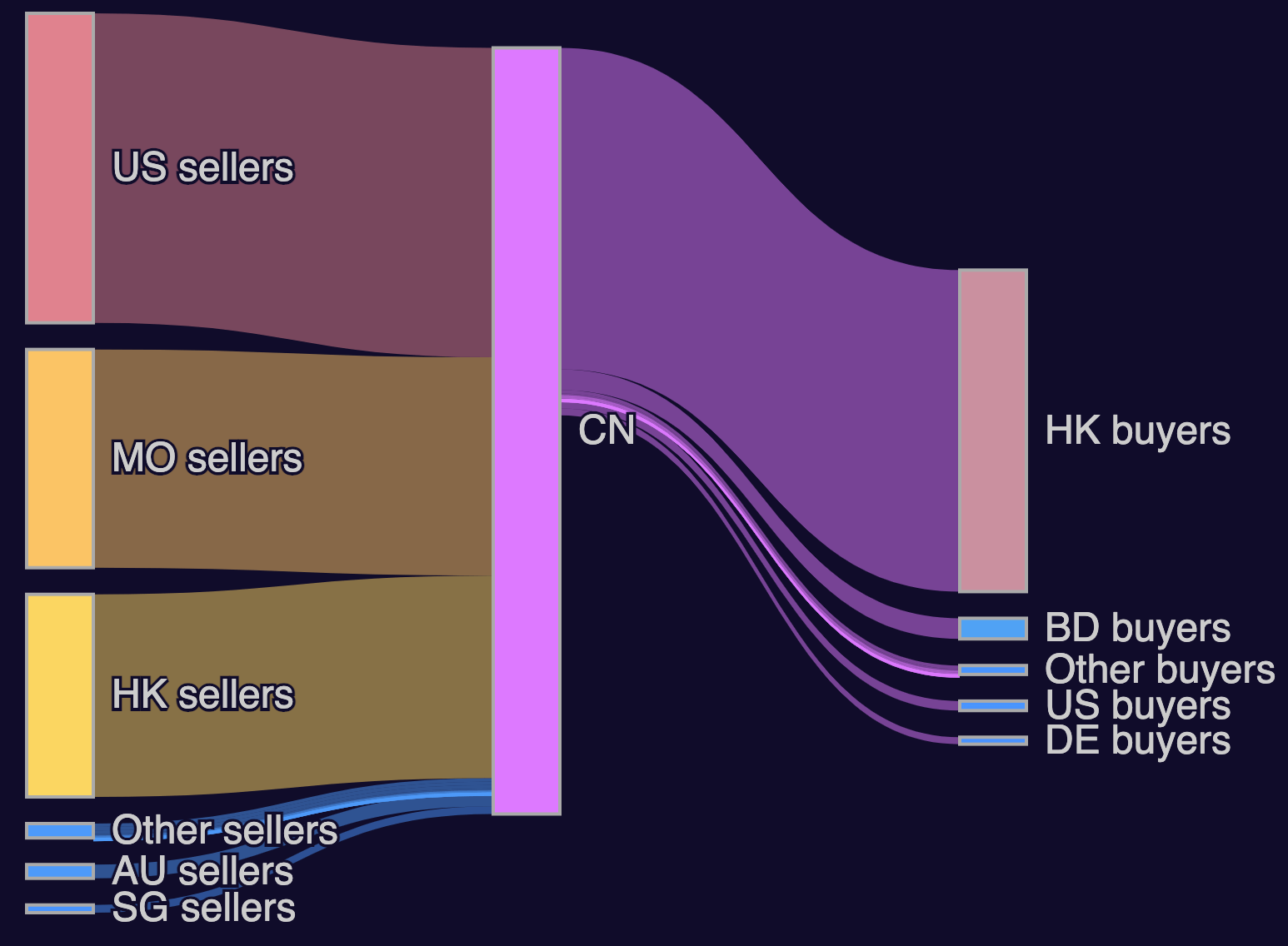

The US sold way more IPv4s than it bought.

China accumulated far more IPv4s than it sold during the same time period.

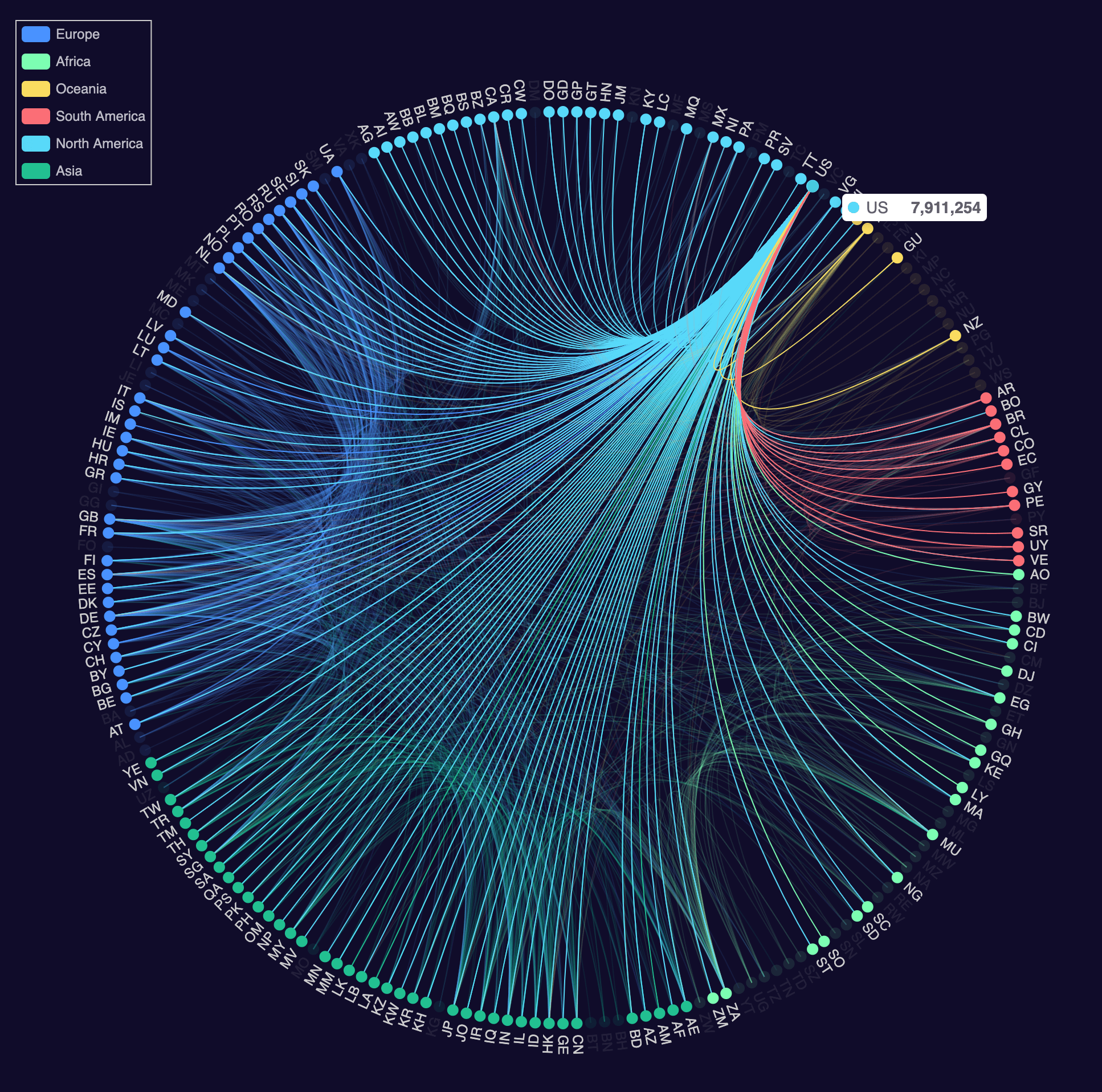

The US was a party in 16 of the top 25 trading pairs.

$ jq -c '[.value, .source, .target]' links.json \

| sed 's/[][]//g' \

| sort -rn \

| head -n25 \

| csvlook --no-header \

| tail -n+3

| 581,460 | SE | DK |

| 309,770 | US | GB |

| 191,127 | US | MY |

| 182,073 | US | IN |

| 130,018 | US | AE |

| 117,866 | US | IL |

| 103,883 | DE | GB |

| 87,389 | DE | US |

| 82,433 | IN | GB |

| 74,706 | US | SG |

| 72,857 | CA | US |

| 67,084 | IT | US |

| 65,795 | US | BD |

| 65,536 | SK | NL |

| 65,328 | BE | DE |

| 65,152 | JP | EG |

| 59,841 | US | NL |

| 57,463 | US | IT |

| 55,785 | US | BR |

| 55,299 | HK | MU |

| 47,897 | CN | HK |

| 47,306 | US | HK |

| 46,100 | US | CN |

| 43,125 | US | CA |

| 41,974 | BE | GB |

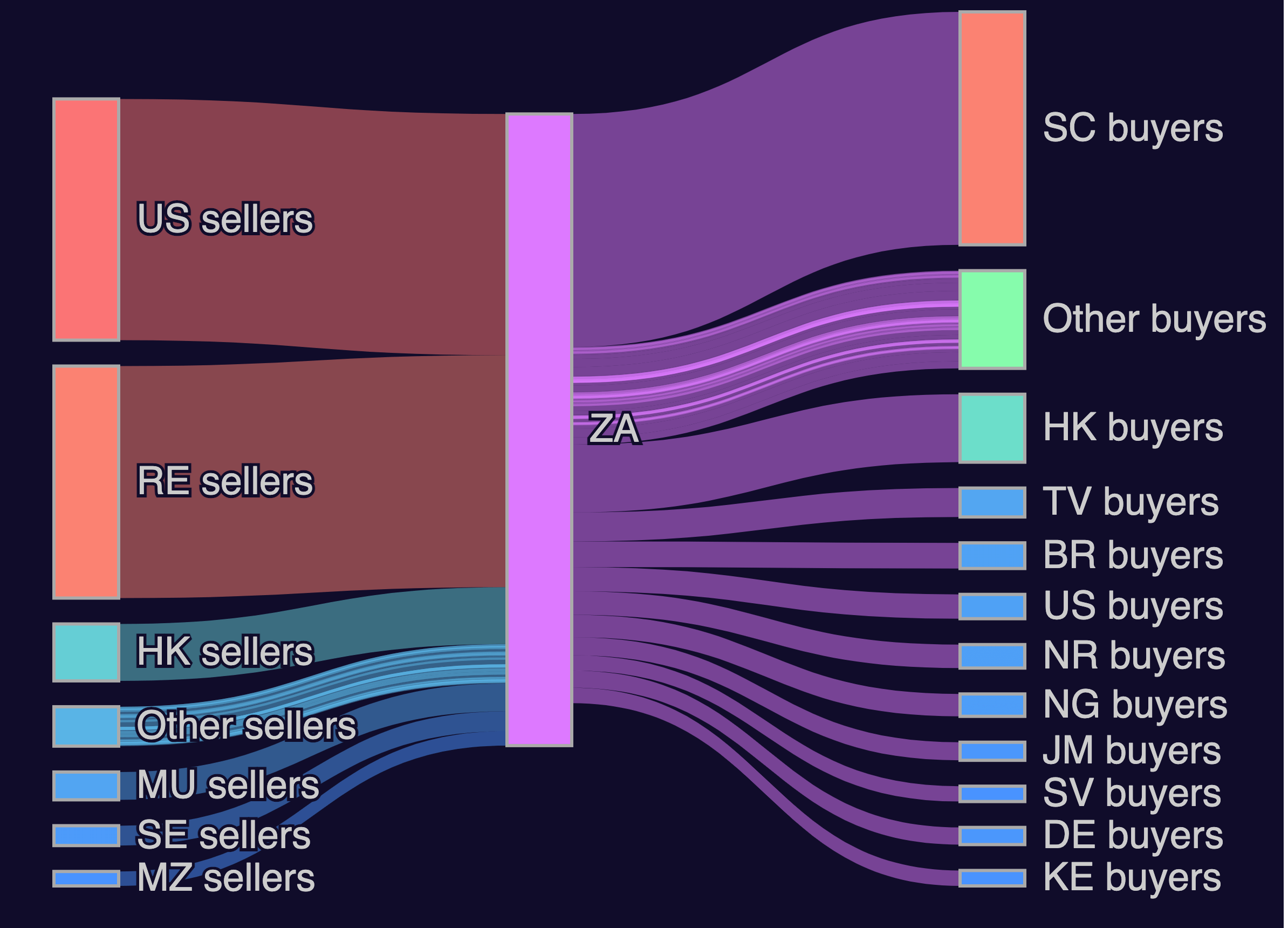

South Africa, despite owning less than 1% of the world's IPv4 addresses, ranked 6th out of 206 in terms of IPv4 trading partner country count for March of this year. The ranking was achieved by the work of 70 different firms and organisations with Liquid Telecom involved in 20% of the blocks being transferred.

COPY (

SELECT a.country source,

b.country target,

sum(a.num_ips)::uint64 AS value

FROM ipinfo_ips a

LEFT JOIN ipinfo_ips b ON a.start_ip = b.start_ip

WHERE a.downloaded_at = '2023-03-06'

AND b.downloaded_at = '2023-03-27'

AND a.country != b.country

AND a.is_ipv4

AND b.is_ipv4

GROUP BY 1, 2

ORDER BY 3 DESC

) TO 'links.json';

$ (jq -c '[.source]' links.json;

jq -c '[.target]' links.json) \

| grep -o '[A-Z]*' \

| sort \

| uniq -c \

| sort -rn \

| head

227 US

124 GB

113 DE

106 NL

82 FR

77 ZA

75 IN

70 SG

70 ES

68 RU

render('ZA', group_under=3_000)

Though the bulk of IPv4 trade is the US selling to Asian countries, most countries trade with many partners, however small their deals are. Below I've highlighted the countries trading with the US over the 21 days in March.

$ duckdb deals.duckdb

COPY (

SELECT a.country source,

a.continent_name source_continent_name,

b.country target,

b.continent_name target_continent_name,

sum(a.num_ips)::uint64 AS value

FROM ipinfo_ips a

LEFT JOIN ipinfo_ips b ON a.start_ip = b.start_ip

WHERE a.downloaded_at = '2023-03-06'

AND b.downloaded_at = '2023-03-27'

AND a.country != b.country

AND a.is_ipv4

AND b.is_ipv4

GROUP BY 1, 2, 3, 4

ORDER BY 2, 1

) TO 'links_with_continents.json';

$ python

import json

from operator import itemgetter

from pyecharts.charts import Graph

import pyecharts.options as opts

data = [json.loads(x)

for x in open('links_with_continents.json', 'r')]

categories = [{'name': y} for y in

set([x['source_continent_name'] for x in data] +

[x['target_continent_name'] for x in data])]

countries = [y for y in

set([(x['source'],

x['source_continent_name']) for x in data] +

[(x['target'],

x['target_continent_name']) for x in data])]

countries = sorted(countries, key=itemgetter(1, 0))

country_ids = [x[0] for x in countries]

amounts = {}

for x in data:

if x['source'] in amounts.keys():

amounts[x['source']] = amounts[x['source']] + x['value']

else:

amounts[x['source']] = x['value']

if x['target'] in amounts.keys():

amounts[x['target']] = amounts[x['target']] + x['value']

else:

amounts[x['target']] = x['value']

nodes = [{'id': country_id,

'name': country_name,

'value': amounts[country_name],

'label': {'normal': {'show': True}},

'category': categories.index({'name': continent_name})}

for country_id, (country_name,

continent_name) in enumerate(countries)]

links = [{'id': row_num,

'source': country_ids.index(x['source']),

'target': country_ids.index(x['target'])}

for row_num, x in enumerate(data)]

Graph(init_opts=opts.InitOpts(width='1000px',

height='1000px')).add(

'',

nodes=nodes,

links=links,

categories=categories,

layout='circular',

is_rotate_label=True,

linestyle_opts=opts.LineStyleOpts(color='source', curve=0.3),

label_opts=opts.LabelOpts(position='right'))\

.set_global_opts(

title_opts=opts.TitleOpts(title=''),

legend_opts=opts.LegendOpts(

orient='vertical',

pos_left='2%',

pos_top='2%'))\

.set_dark_mode()\

.render('graph_circular.html')

Exploring IPv4 Ownership

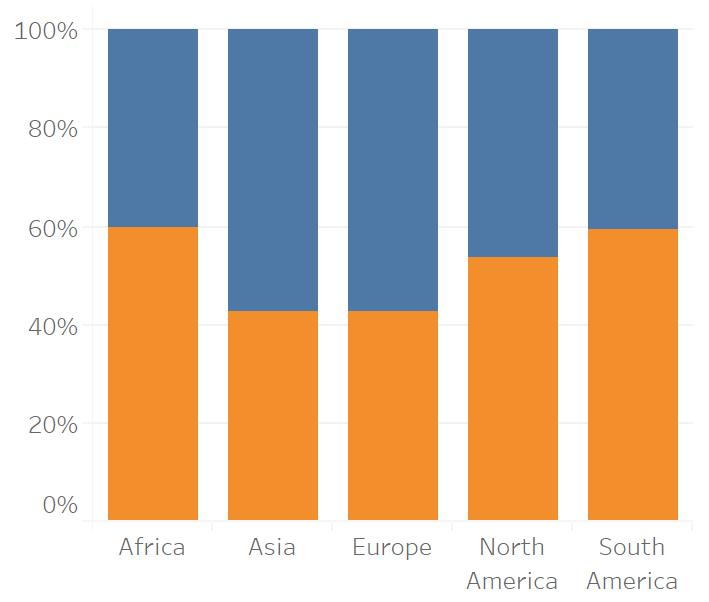

All five of the world's IPv4 registrars have between 40-60% of their IPv4s registered outside of their region of responsibility. Below in blue are allocations that are not in-region.

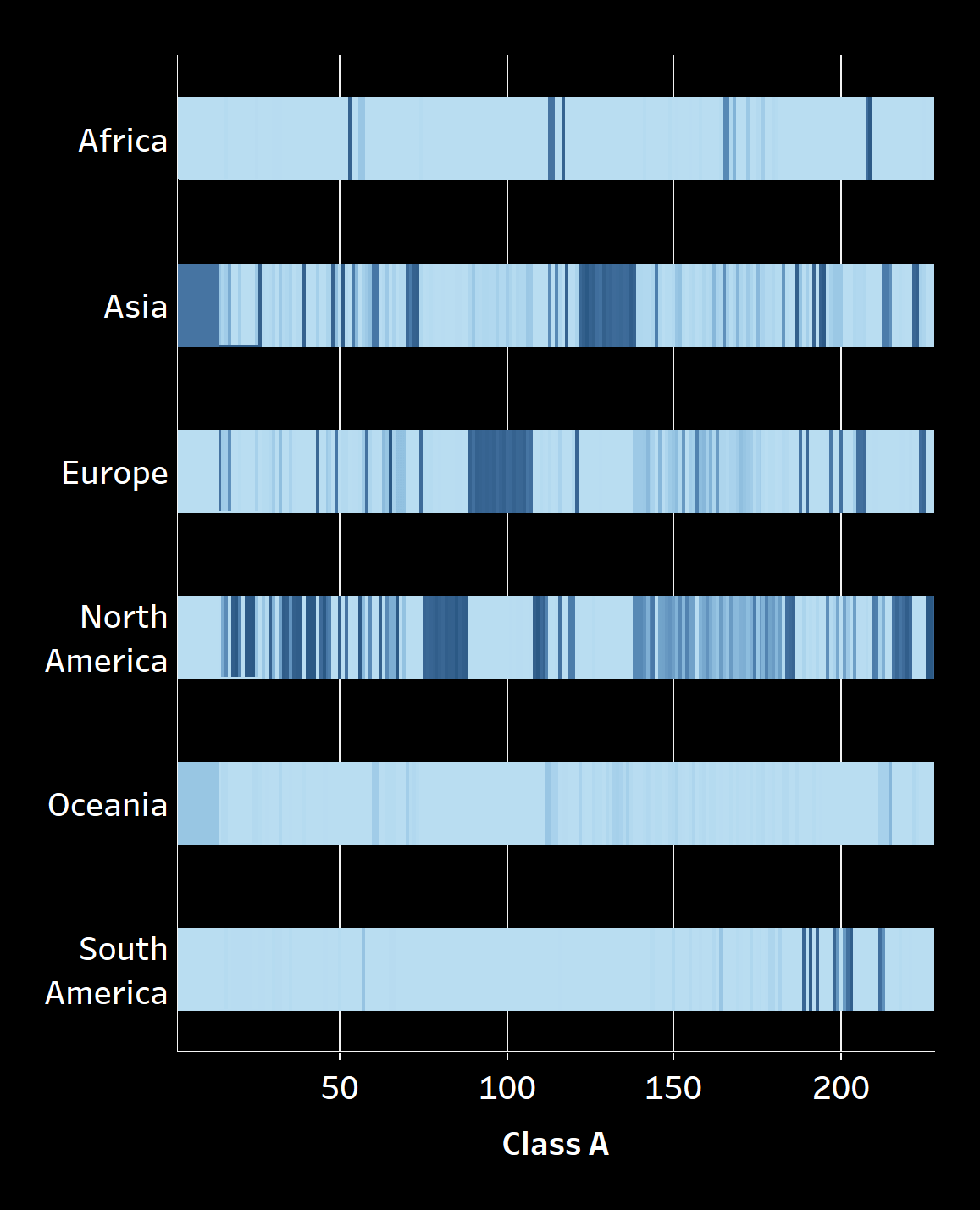

There is no clear numerical pattern as to where countries are allocated IPv4s across the spectrum. Below is the frequency of ownership across the IPv4 spectrum broken down by continent.

I've taken the top country of ownership in each class A of the IPv4 spectrum and counted how many times each country appears. There are 113 class As where the US is the dominant IPv4 owner followed by China with 37.

$ duckdb deals.duckdb

.mode column

WITH a AS (

SELECT class_a,

emoji,

sum(num_ips)::int64 num_ips,

row_number()

OVER (PARTITION BY class_a

ORDER BY sum(num_ips) DESC) row_num

FROM ipinfo_ips

WHERE downloaded_at = '2023-05-21'

AND is_ipv4

GROUP BY 1, 2)

SELECT emoji,

COUNT(*) AS num_class_a

FROM a

WHERE row_num = 1

GROUP BY 1

ORDER BY 2 DESC;

emoji num_class_a

----- -----------

🇺🇸 114

🇨🇳 35

🇩🇪 17

🇯🇵 10

🇬🇧 8

🇫🇷 7

🇧🇷 7

🇿🇦 4

🇮🇹 3

🇪🇸 2

🇦🇷 2

🇷🇺 2

🇨🇦 1

🇲🇽 1

🇮🇷 1

🇸🇬 1

🇨🇭 1

🇦🇺 1

🇰🇷 1

🇳🇱 1

🇲🇺 1

🇮🇳 1

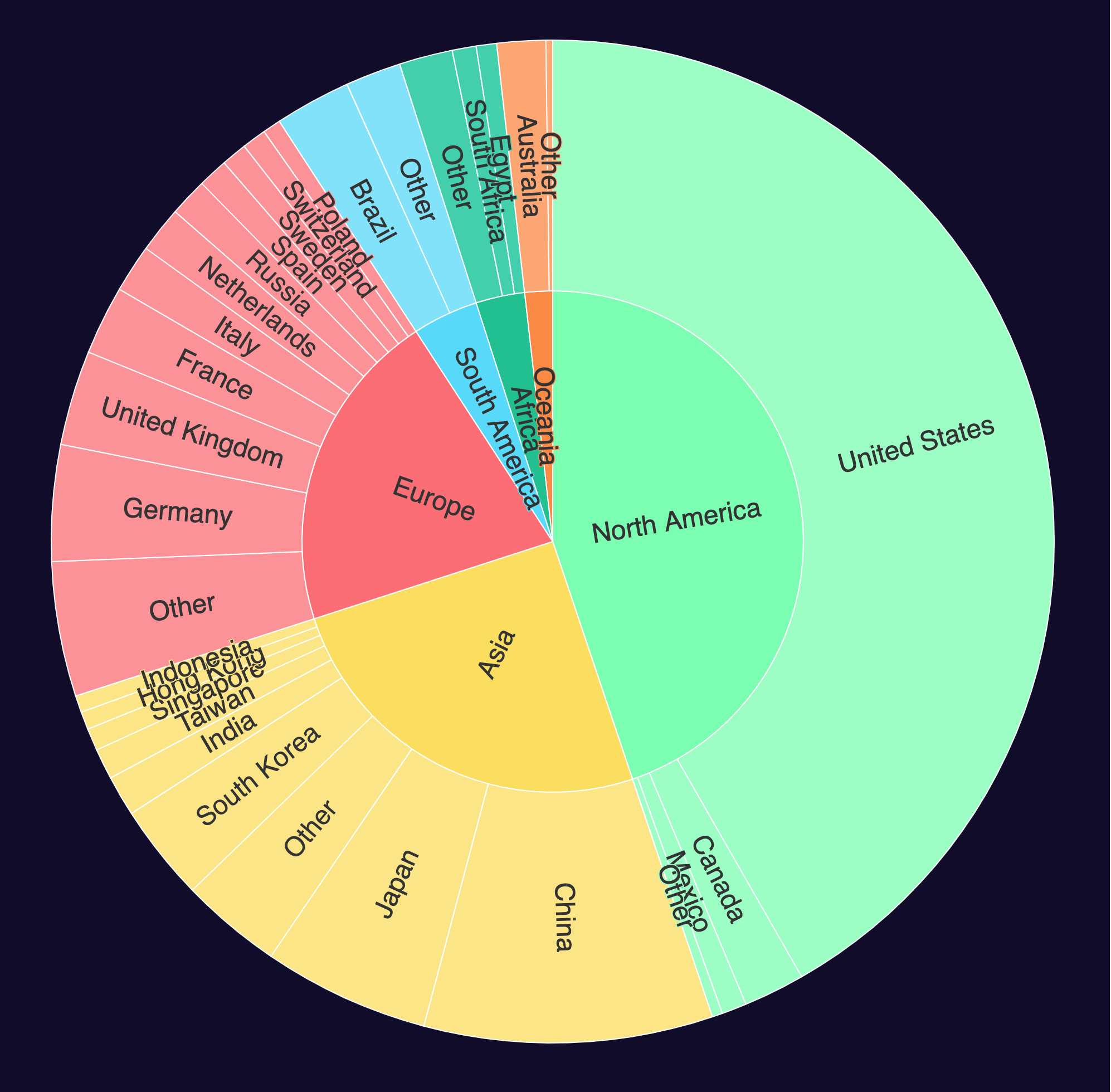

At present, less than 10% of the world's IPv4 addresses are owned by countries in Africa, South America and Oceania. Of these three continents, there are four countries which hold half of their IPv4s.

COPY (

SELECT continent_name,

country_name,

sum(num_ips)::uint64 AS value

FROM ipinfo_ips

WHERE downloaded_at = '2023-05-21'

AND is_ipv4

GROUP BY 1, 2

ORDER BY 1, 3 DESC

) TO 'country_ipv4_inventories.json';

$ python

import json

from pyecharts.charts import Sunburst

import pyecharts.options as opts

def render_sunburst(source, group_under=20_000_000):

inventories = {}

for line in open(source, 'r'):

rec = json.loads(line)

if rec['continent_name'] not in inventories.keys():

inventories[rec['continent_name']] = {}

country_name = rec['country_name'] \

if rec['value'] > group_under else 'Other'

if country_name in inventories[rec['continent_name']].keys():

inventories[rec['continent_name']][country_name] = \

inventories[rec['continent_name']]\

[country_name] + rec['value']

else:

inventories[rec['continent_name']]\

[country_name] = rec['value']

data = [opts.SunburstItem(

name=continent_name,

children=[

opts.SunburstItem(

name=country_name,

value=inventories[continent_name]

[country_name])

for country_name in inventories[continent_name].keys()])

for continent_name in inventories.keys()]

Sunburst(init_opts=opts.InitOpts(width='1000px',

height='1000px'))\

.add(series_name='',

data_pair=data,

radius=[0, '90%'])\

.set_global_opts(title_opts=opts.TitleOpts(title=''))\

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}",

font_size=24))\

.set_dark_mode()\

.render('%s.ipv4_ownership.html' % source)

render_sunburst('country_ipv4_inventories.json')

Brazil owns 89 million IPv4s with 70% of these belonging to four firms.

SELECT as_domain,

sum(num_ips)::uint64 num_ips

FROM ipinfo_ips

WHERE country = 'BR'

AND downloaded_at = '2023-05-21'

AND is_ipv4

GROUP BY 1

ORDER BY 2 DESC

LIMIT 4;

┌───────────────────┬──────────┐

│ as_domain │ num_ips │

│ varchar │ uint64 │

├───────────────────┼──────────┤

│ telefonica.com.br │ 22333432 │

│ claro.com.br │ 16791498 │

│ vtal.com │ 11861358 │

│ tim.com.br │ 10232320 │

└───────────────────┴──────────┘

Those four firms own more than half of South America's 151M IPv4s. The top holder Telefonica, despite being headquartered in Spain, has almost 5x more IPv4s registered in South America than they do in Europe.

SELECT SPLIT_PART(as_domain, '.', 1) domain_stub,

sum(num_ips)::uint64 num_ips

FROM ipinfo_ips

WHERE continent = 'SA'

AND downloaded_at = '2023-05-21'

AND LENGTH(domain_stub)

AND is_ipv4

GROUP BY 1

ORDER BY 2 DESC

LIMIT 4;

┌─────────────┬──────────┐

│ domain_stub │ num_ips │

│ varchar │ uint64 │

├─────────────┼──────────┤

│ telefonica │ 31879936 │

│ claro │ 21444715 │

│ vtal │ 11861742 │

│ tim │ 10232320 │

└─────────────┴──────────┘

In March, India owned roughly half the number of IPv4s as South Korea, a country with 27x fewer inhabitants, but IPv6 has taken off in India and they had the 10th largest pool of IPv6 addresses in the world that month.

SELECT country,

SUM(num_ips)::int128 AS num_ips

FROM ipinfo_ips

WHERE downloaded_at = '2023-03-27'

AND NOT is_ipv4

GROUP BY 1

ORDER BY 2 DESC

LIMIT 10;

┌─────────┬────────────────────────────────────┐

│ country │ num_ips │

│ varchar │ int128 │

├─────────┼────────────────────────────────────┤

│ CN │ 2088490354094576258333937435148288 │

│ US │ 1713863955753218552117288597716992 │

│ DE │ 1322211361625902111950014519443456 │

│ JP │ 620670958376498712316317603463168 │

│ GB │ 613304728389492504142635929174016 │

│ AU │ 530269836335129241226873734692864 │

│ IT │ 523839146960659078484043403100160 │

│ NL │ 518685716056336940264234925686784 │

│ BR │ 495570585164422898369737516908544 │

│ IN │ 443307870479945933230970004045824 │

├─────────┴────────────────────────────────────┤

│ 10 rows 2 columns │

└──────────────────────────────────────────────┘

104.0.0.0/8 is home to the largest number of countries of any class A.

SELECT class_a,

COUNT(DISTINCT country) AS num_countries

FROM ipinfo_ips

WHERE downloaded_at = '2023-05-21'

AND is_ipv4

GROUP BY 1

ORDER BY 2 desc

LIMIT 10;

┌─────────┬───────────────┐

│ class_a │ num_countries │

│ uint64 │ int64 │

├─────────┼───────────────┤

│ 104 │ 242 │

│ 172 │ 236 │

│ 146 │ 234 │

│ 163 │ 219 │

│ 140 │ 210 │

│ 196 │ 200 │

│ 57 │ 196 │

│ 136 │ 180 │

│ 45 │ 151 │

│ 185 │ 146 │

├─────────┴───────────────┤

│ 10 rows 2 columns │

└─────────────────────────┘

Cloudflare has a presence in more countries than any other organisation.

SELECT as_domain,

COUNT(DISTINCT country_name) num_countries

FROM ipinfo_ips

WHERE downloaded_at = '2023-05-21'

AND LENGTH(as_domain)

AND is_ipv4

GROUP BY 1

ORDER BY 2 DESC

LIMIT 10;

┌────────────────┬───────────────┐

│ as_domain │ num_countries │

│ varchar │ int64 │

├────────────────┼───────────────┤

│ cloudflare.com │ 242 │

│ akamai.com │ 236 │

│ fastly.com │ 235 │

│ netskope.com │ 218 │

│ google.com │ 197 │

│ fibergride.com │ 195 │

│ orange.com │ 157 │

│ pch.net │ 102 │

│ iweb.com │ 98 │

│ m247.com │ 95 │

├────────────────┴───────────────┤

│ 10 rows 2 columns │

└────────────────────────────────┘

The Vatican, the smallest country in the world by landmass, has 32,461 IPv4s at its disposal.

SELECT as_domain,

sum(num_ips)::uint64 num_ips

FROM ipinfo_ips

WHERE country_name = 'Vatican'

AND downloaded_at = '2023-03-27'

AND is_ipv4

GROUP BY 1

ORDER BY 2 DESC;

┌───────────────────┬─────────┐

│ as_domain │ num_ips │

│ varchar │ uint64 │

├───────────────────┼─────────┤

│ sprint.net │ 16128 │

│ vaticanstate.va │ 9088 │

│ att.com │ 4096 │

│ fibergride.com │ 1280 │

│ spc.va │ 1024 │

│ vaticanlibrary.va │ 512 │

│ scalaxy.com │ 256 │

│ akamai.com │ 80 │

│ cloudflare.com │ 14 │

│ fastly.com │ 8 │

│ google.com │ 2 │

│ comcast.com │ 1 │

├───────────────────┴─────────┤

│ 12 rows 2 columns │

└─────────────────────────────┘

Pitcairn, the smallest country by population in the world, had five useable IPv4s in total before SpaceX came along.

SELECT as_domain,

asn,

country_name,

start_ip,

end_ip

FROM ipinfo_ips

WHERE country_name = 'Pitcairn'

AND is_ipv4

AND downloaded_at = '2023-05-21';

┌────────────────┬─────────┬──────────────┬────────────────┬────────────────┐

│ as_domain │ asn │ country_name │ start_ip │ end_ip │

│ varchar │ varchar │ varchar │ varchar │ varchar │

├────────────────┼─────────┼──────────────┼────────────────┼────────────────┤

│ spacex.com │ AS14593 │ Pitcairn │ 206.83.127.128 │ 206.83.127.255 │

│ spacex.com │ AS14593 │ Pitcairn │ 65.181.30.0 │ 65.181.30.63 │

│ cloudflare.com │ AS13335 │ Pitcairn │ 104.28.12.135 │ 104.28.12.137 │

│ google.com │ AS36492 │ Pitcairn │ 136.23.3.231 │ 136.23.3.231 │

│ google.com │ AS36492 │ Pitcairn │ 136.23.11.199 │ 136.23.11.199 │

└────────────────┴─────────┴──────────────┴────────────────┴────────────────┘

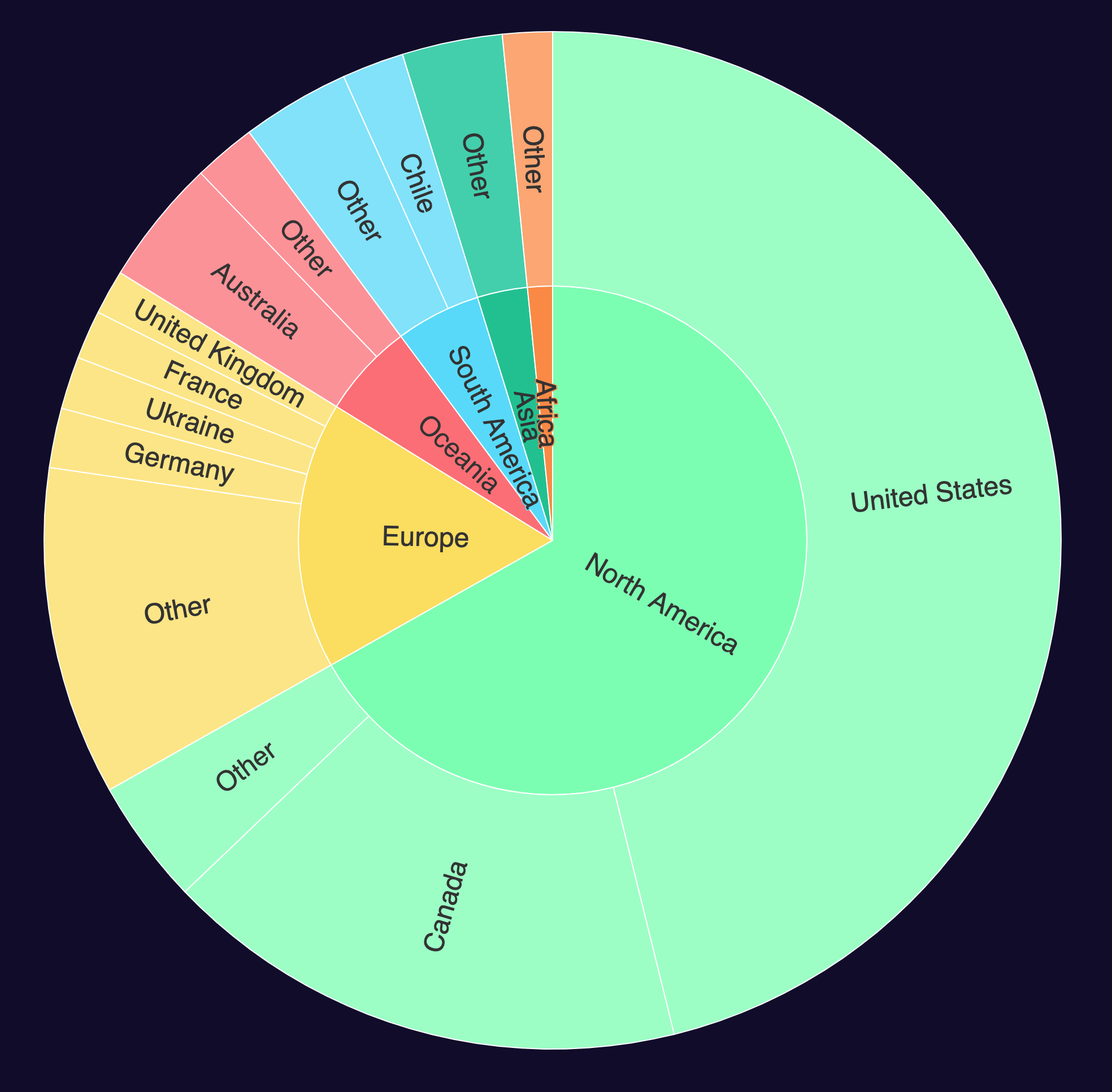

SpaceX now have IPv4s in 81 countries on six continents.

COPY (

SELECT continent_name,

country_name,

sum(num_ips)::uint64 AS value

FROM ipinfo_ips

WHERE downloaded_at = '2023-05-21'

AND as_domain = 'spacex.com'

AND is_ipv4

GROUP BY 1, 2

ORDER BY 1, 3 DESC

) TO 'spacex_ipv4.json';

render_sunburst('spacex_ipv4.json', 3000)

Almost half of Amazon's IPv4s are now registered outside of the US.

COPY (

SELECT continent_name,

country_name,

sum(num_ips)::uint64 AS value

FROM ipinfo_ips

WHERE downloaded_at = '2023-05-21'

AND as_domain = 'amazon.com'

AND is_ipv4

GROUP BY 1, 2

ORDER BY 1, 3 DESC

) TO 'amazon_ipv4.json';

render_sunburst('amazon_ipv4.json', group_under=800_000)